A University of Maryland researcher announced this week that he has created a prototype device using a parallel processor that could very well be the “next generation of personal computers.”

The test model, using technology based on parallel processing on a single chip, is capable of computing at speeds up to 100 times faster than current desktops, said Uzi Vishkin, a professor in the Clark School’s electrical and computer engineering department and the university’s Institute for Advanced Computer Studies.

“The world has been trying to build parallel systems for 40 years,” Vishkin told TechNewsWorld.

All Together Now

Parallel processing lets a computer work on many parts of a job at once by dividing a given task among multiple processors. While parallel processing has been used on a colossal scale for years in supercomputers, this is the first time an analogous technology has been brought to the desktop, Vishkin said.
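As a rough illustration of the "divide the task among processors" idea, the following Python sketch splits a list of numbers into chunks, sums each chunk on a separate worker, and then combines the partial results. It is not Vishkin's design: the function names `split_into_chunks` and `parallel_sum` and the choice of four workers are assumptions made for this example, and Python threads are used only to show how the work is decomposed, not to demonstrate a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, n_workers):
    """Divide items into n_workers nearly equal contiguous chunks (hypothetical helper)."""
    size, extra = divmod(len(items), n_workers)
    chunks, start = [], 0
    for i in range(n_workers):
        # The first `extra` chunks take one additional item each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def parallel_sum(items, n_workers=4):
    """Sum items by giving each worker one chunk, then adding the partial sums."""
    chunks = split_into_chunks(items, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_sums = list(pool.map(sum, chunks))
    # Combining the partial results is the only step that stays serial.
    return sum(partial_sums)
```

Calling `parallel_sum(list(range(1, 101)))` gives the same total as a plain serial `sum`; only the way the work is organized changes.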

Vishkin’s prototype uses a circuit board roughly the size of a license plate onto which 64 parallel processors are mounted. Vishkin, along with a team of researchers, has developed a “crucial” parallel computer organization that allows the processors to work together and also makes programming both practical and simple for software developers. Until now, the difficulty of programming has kept multi-core architectures from achieving their full potential in desktop computers.

“The algorithm is really the essence, the idea part of the program,” Vishkin explained. “I realized that people don’t even know how to think algorithmically in parallel. How do you go about designing your algorithms so it will be parallel?”

The new technology is like hiring a team of maids versus a single housekeeper, he said. “Suppose you hire one person to clean your home and it takes five hours, or 300 minutes, for the person to perform each task, one after the other. That’s analogous to the current serial processing method. Now imagine that you have 100 cleaning people who can work on your home at the same time. That’s the parallel processing method.”

The challenge for software developers is creating programs that can manage all the different tasks and workers so the job takes only 3 minutes rather than 300. “Our algorithms make that feasible for general-purpose computing tasks for the first time,” according to Vishkin.
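The arithmetic behind the 300-minute versus 3-minute figure can be sketched in a few lines of Python. This assumes the ideal case, in which the work divides evenly and the workers never wait on one another; the function name `ideal_parallel_minutes` and the optional `coordination_minutes` parameter are hypothetical and appear nowhere in Vishkin's work.

```python
def ideal_parallel_minutes(serial_minutes, workers, coordination_minutes=0.0):
    """Best-case time when a job splits evenly across workers.

    coordination_minutes is a hypothetical allowance for the time spent
    organizing the workers; the default of 0 gives the ideal case.
    """
    if workers < 1:
        raise ValueError("workers must be at least 1")
    return serial_minutes / workers + coordination_minutes


# One cleaner needs 300 minutes; 100 cleaners in the ideal case need 3.
print(ideal_parallel_minutes(300, 100))
```

Any nonzero coordination time pushes the result above the ideal figure, which is one way to picture the management problem the software has to solve.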

Once in use, the technology could prove a boon for pharmaceutical companies, for instance, enabling researchers to complete computer models on the effects of certain experimental drugs in hours or days rather than weeks or months.

An Evolutionary Step

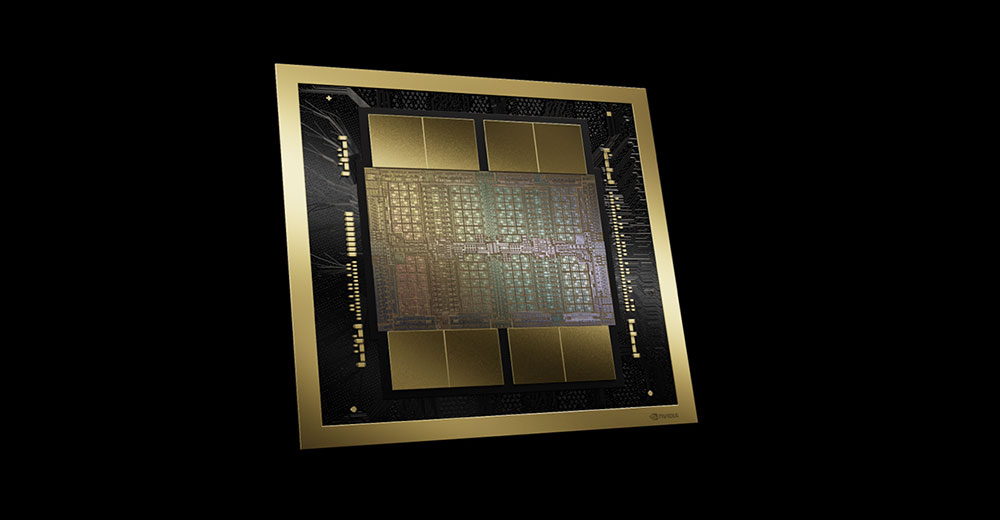

The technology developed by Vishkin is part of a trend among chip makers, such as Broadcom and Nvidia, James Statten, a Forrester Research analyst, told TechNewsWorld.

“They have been determining is it better to do lots of things simultaneously rather than try to do some things in order,” he explained. “And the proof is really coming true that it is much, much more effective to do lots of things simultaneously. And you can do that with lower powered chips that are running at much lower megahertz.”

While the technology has existed in the supercomputing arena for some time, Statten said, doing it on a single chip is new. The fact that they are putting this many cores on a single chip for the mainstream market is “fairly significant,” he said.

“Everybody is on the same page,” he asserted. “Everybody is moving down, parallel is the way to go. Moore’s Law no longer is fulfilled by processor speed. It is now fulfilled by multi-core. So that is the overall trend.”

However, sizing a supercomputer down to desktop proportions will take more than new hardware, said Tom R. Halfhill, a senior analyst at In-Stat.

“Massively parallel processors with hundreds of cores have been in production for years and are in commercial products today,” he told TechNewsWorld. “However, parallel processing is more difficult to exploit in typical PCs for average users. Some PC applications simply cannot benefit from it. Other application programs must be rewritten from the ground up, which takes time and may require new or improved programming languages.”

Getting the Ball Rolling

The challenge, agreed Statten, is that applications have to be written to take advantage of parallel processing. Currently, there are very few programs, even in the supercomputing space, that are written for this.

“It’s sort of a chicken and egg thing. I can’t write parallel code until I have a processor that can execute parallel code. And so a lot of the applications that have been written that do parallel processing have schedulers that will send the task to multiple separate processors.”

IBM, AMD and Intel are working with universities now that the multi-core chips have started coming out. “You’re starting to get a little ground swell, but for this to really take off in the market, we need these processors available in volume and we need the major software providers to begin building things in parallel.”

It’s an encouraging sign that operating system designers have begun talking about building parallel processing into their software. Greater availability would make things much simpler for software designers, who could rely on a parallel-processing OS to handle much of the work that makes parallel processing happen rather than writing that code themselves.

“This is very evolutionary. It’s going to take several years for this to proliferate in the market,” Statten said.