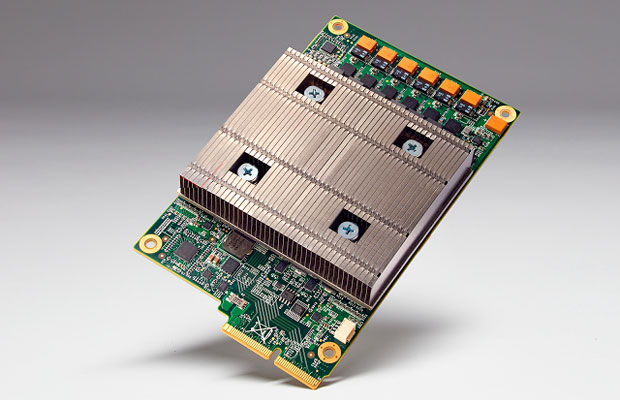

Google last week announced the Tensor Processing Unit, a custom application-specific integrated circuit, at Google I/O.

Built for machine learning applications, TPU has been running in Google’s data centers for more than a year.

Google’s AlphaGo software, which thrashed an 18-time international Go champion in a match earlier this year, ran on servers using TPUs.

TPU is tailored for TensorFlow, Google’s software library for machine intelligence, which it turned over to the open source community last year.

Moore Still Rules

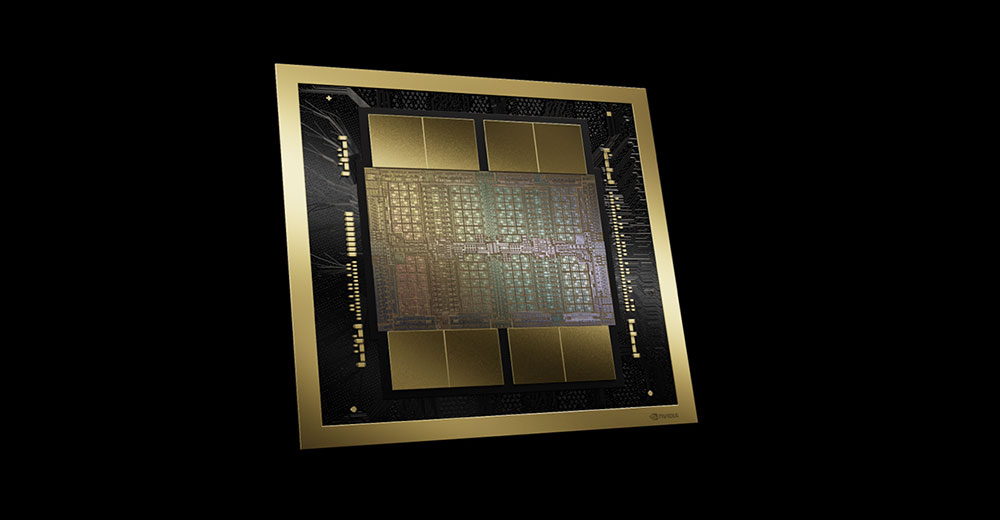

For machine learning, TPUs deliver an order of magnitude better performance per watt, Google said. That is comparable to fast-forwarding technology about seven years, or three generations of Moore’s Law.
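The arithmetic behind that comparison can be checked with a rough sketch. It assumes, purely for illustration, that each Moore's Law generation doubles performance per watt; Moore's Law itself describes transistor density, so this is an approximation and not Google's own calculation. The function names are hypothetical.

```python
import math

def doublings_for_gain(gain):
    """Number of doublings needed to reach a given improvement factor."""
    return math.log2(gain)

def years_for_gain(gain, years_per_generation):
    """Years needed to reach the gain, assuming one doubling per generation."""
    return doublings_for_gain(gain) * years_per_generation

# Figures from Google's claim: three generations spread over about seven years.
generations = 3
years = 7
years_per_generation = years / generations   # roughly 2.3 years each

# Assumption: one doubling per generation, so three generations give 2**3.
gain_from_three_generations = 2 ** generations

# A literal tenfold gain would need slightly more than three doublings.
doublings_for_tenfold = doublings_for_gain(10)

print(gain_from_three_generations)
print(round(doublings_for_tenfold, 2))
print(round(years_for_gain(10, years_per_generation), 1))
```

Under that doubling assumption, three generations work out to an eightfold gain, which is in the same range as the "order of magnitude" Google cited.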

That claim is misleading, according to Kevin Krewell, a principal analyst at Tirias Research.

“It only works on 8-bit math,” he told TechNewsWorld. “It’s basically like a Z80 microprocessor in that regard. All that talk about it being three generations ahead refers to processors a year ago, so they’re comparing it to 28-nm processors.”

Taiwan Semiconductor Manufacturing reportedly has been working on a 10-nanometer FinFET processor for Apple.

“By stripping out most functions and using only necessary math, Google has a chip that acts as though it was a more complex processor from a couple generations ahead,” Krewell said.
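To make the "8-bit math" point concrete, here is a minimal sketch of how a dot product, the core operation in neural network inference, can be approximated with 8-bit integers. This illustrates the general technique of symmetric linear quantization; it is not a description of how the TPU works internally, which the article does not detail. The function names and sample values are hypothetical.

```python
def quantize(values, num_bits=8):
    """Map floats to signed integers using one shared scale factor."""
    qmax = 2 ** (num_bits - 1) - 1            # 127 for 8 bits
    max_abs = max(abs(v) for v in values) or 1.0
    scale = max_abs / qmax
    # Round to the nearest integer step and clamp to the signed range.
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale

def quantized_dot(a, b):
    """Dot product computed on 8-bit integers, rescaled to a float at the end."""
    qa, scale_a = quantize(a)
    qb, scale_b = quantize(b)
    # The multiply-accumulate runs entirely on small integers.
    accumulator = sum(x * y for x, y in zip(qa, qb))
    return accumulator * scale_a * scale_b

# Illustrative values only.
weights = [0.5, -1.0, 0.25]
inputs = [1.0, 0.5, -2.0]

exact = sum(w * x for w, x in zip(weights, inputs))
approx = quantized_dot(weights, inputs)

print(exact, approx)
```

The integer result lands close to the floating-point one while needing far simpler arithmetic, which is the trade-off Krewell describes: less precision in exchange for less hardware.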

Moore’s law focuses on transistor density and “tends to be tied to parts that are targeted at calculation speed,” pointed out Rob Enderle, principal analyst at the Enderle Group. The TPU “is more focused on calculation efficiency, so it likely won’t push transistor density. I don’t expect it to have any real impact on Moore’s law.”

Still, the board design “has a really big heat sink, so it’s a relatively large processor. If I’m Google and I’m building this custom chip, I’m going to build the biggest one I can put into the power envelope,” Krewell noted.

Potential Impact

“Clearly, hyperscale cloud operators are gradually becoming more vertically integrated, so they move more into designing their own equipment,” said John Dinsdale, chief analyst at Synergy Research Group.

That could “help them strengthen their game,” he told TechNewsWorld.

The processor could make Google “a much stronger player with AI-based products, but ‘could’ and ‘will’ are very different words, and Google has been more the company of ‘could but didn’t’ of late,” Enderle told TechNewsWorld.

The TPU will let Google scale up its query engine significantly, providing for higher-density servers that can simultaneously handle a higher volume of questions, he said. However, Google’s efforts “tend to be underresourced, so it’s unlikely to meet its potential unless that practice changes.”

There Isn’t Only One

The TPU isn’t the first processor designed for machine learning.

Intel’s Xeon Phi processor product line is part of that company’s Scalable System Framework, which aims at bringing machine learning and high-performance computing into the exascale era.

Intel’s aim is to create systems that converge HPC, big data, machine learning and visualization workloads within a common framework that can run in either the cloud or data centers, the latter ranging from smaller workgroup clusters to large supercomputers.

A Case of Overkill?

While the TPU “will have a big effect and impact in data-intensive research, most business problems and tasks can be solved with simpler machine learning approaches,” Francisco Martin, CEO of BigML, pointed out. “Only a few companies have the volume of data that Google manages.”

Traditionally, custom chips for machine learning algorithms “never turned out to be very successful,” he told TechNewsWorld.

“First, custom architectures require custom development, which makes adoption difficult,” Martin noted. “Second, by Moore’s law, standard chips are going to be more powerful every two years.”

TPU is “tailored to very specific machine learning applications based on Google’s TensorFlow,” he said. Like other deep learning frameworks, it “requires tons of fine-tuning to be useful.” That said, Amazon and Microsoft “will probably need to offer something similar to compete for customers with advanced research projects.”