Facebook on Monday introduced automated alt text, a new tool that describes what’s in an image to help visually impaired users engage more on the site.

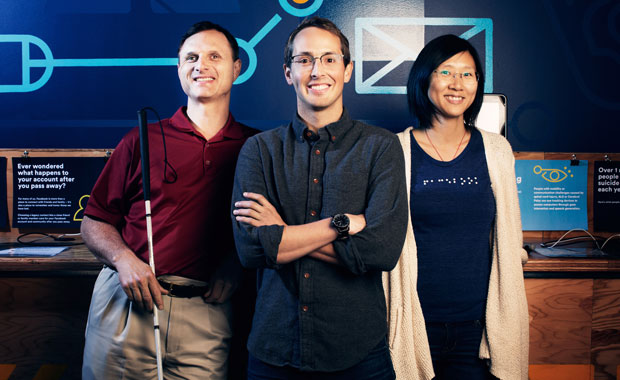

The goal is to help the blind community experience Facebook the same way others enjoy it, according to Facebook’s accessibility team, led by Matt King, Jeff Wieland and Shaomei Wu (pictured above).

People using screen readers on iOS devices will hear a description of the items contained in a photo when they swipe past pictures on a Facebook page.

The descriptions are provided as alt text, using the HTML alt attribute, which is designed to let content managers provide text alternatives for images.

The feature is available in English for iOS users in the United States, the UK, Canada, Australia and New Zealand.

Picture This

The alt attribute is used in HTML and XHTML documents to specify alternative text that is rendered when the element to which it's applied can't be rendered. It is also used by screen reader software.

It was introduced in HTML 2, and in HTML 4.01 it became required for the img and area tags.
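To illustrate what "required" means in practice, here is a minimal Python sketch, using only the standard library's html.parser module, that flags img and area tags with no alt attribute. The class name, the find_missing_alt function and the sample page are hypothetical. The sketch checks only that the attribute is present, not whether its text is meaningful; an empty alt="" counts as present, since that is the usual way to mark a purely decorative image.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Hypothetical checker: collects img and area tags that lack an alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []  # (tag, src-or-href) pairs with no alt attribute

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names; attrs is a list of (name, value).
        if tag in ("img", "area"):
            attr_map = dict(attrs)
            if "alt" not in attr_map:
                self.missing.append((tag, attr_map.get("src") or attr_map.get("href")))

    # Self-closing tags such as <img ... /> go through handle_startendtag,
    # whose default implementation calls handle_starttag, so no override is needed.


def find_missing_alt(html_text):
    finder = MissingAltFinder()
    finder.feed(html_text)
    finder.close()
    return finder.missing


page = """
<img src="beach.jpg" alt="Two people on a beach">
<img src="logo.png">
<map name="m"><area shape="rect" coords="0,0,10,10" href="/home"></map>
"""
print(find_missing_alt(page))  # [('img', 'logo.png'), ('area', '/home')]
```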

Facebook automated alt text for images using object recognition technology. Its computer vision platform, built as part of its work in applied machine learning, provides a visual recognition engine that sees inside images and videos to understand what they show, the company said.

The engine is built on a deep convolutional neural network, supported by tools that collect and annotate the example images used to train it. The platform can also learn new visual concepts within minutes and immediately begin detecting them in new photos and videos, according to Facebook.

For the launch, Facebook selected about 100 concepts (objects and scenes such as eyeglasses, nature, vehicles and sports) based on their prominence in photos and the accuracy of the visual recognition engine. The concepts have specific meanings and aren't open to interpretation.

Facebook’s object detection algorithm detects these concepts with an accuracy of 80 to 99 percent, the company said.
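The selection approach described above, a fixed vocabulary of concepts combined with a quality bar, can be sketched in a few lines of Python. This is a hypothetical illustration, not Facebook's implementation: the detector output, the VOCABULARY set, the 0.80 confidence cutoff and the "Image may contain:" wording are all assumptions. The cutoff merely echoes the low end of the reported accuracy range; a per-detection confidence score is not the same measure as overall accuracy.

```python
import html

# Hypothetical subset of a fixed concept vocabulary; Facebook's actual list of
# roughly 100 concepts is not reproduced here.
VOCABULARY = {"eyeglasses", "nature", "car", "sports", "people", "outdoor", "water"}

# Hypothetical confidence cutoff, chosen to echo the low end of the reported range.
MIN_CONFIDENCE = 0.80


def build_alt_text(detections, vocabulary=VOCABULARY, threshold=MIN_CONFIDENCE):
    """Turn hypothetical (concept, confidence) pairs into a hedged description.

    Only concepts in the fixed vocabulary and at or above the threshold are
    kept, ordered from most to least confident.
    """
    kept = [
        (concept, score)
        for concept, score in detections
        if concept in vocabulary and score >= threshold
    ]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    if not kept:
        # Fall back to a generic label rather than an empty alt, which would
        # tell screen readers the image is purely decorative.
        return "Image"
    # Illustrative wording; it signals that the description is a machine guess.
    return "Image may contain: " + ", ".join(concept for concept, _ in kept)


def img_tag(src, detections):
    """Emit an <img> element whose alt attribute holds the generated description."""
    alt = build_alt_text(detections)
    return f'<img src="{html.escape(src)}" alt="{html.escape(alt)}">'


# "dog" is outside the vocabulary and "car" falls below the cutoff, so both are dropped.
detections = [("people", 0.97), ("water", 0.91), ("dog", 0.95), ("car", 0.42)]
print(img_tag("beach.jpg", detections))
# <img src="beach.jpg" alt="Image may contain: people, water">
```

Restricting output to a small, unambiguous vocabulary and a high confidence bar trades coverage for reliability: the description may omit things, but what it does say is less likely to be wrong.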

In the Footsteps of Twitter and Others

Twitter beat Facebook to the punch, announcing last month that iOS and Android users could now add alt text to images in tweets, Susan Schreiner, an analyst at C4 Trends, pointed out.

Businesses can also use the feature, since Twitter has extended it to its REST API and to Twitter Cards.

Startup Aipoly offers an iOS app that runs a multilayered neural network on the device, interpreting the camera's input and describing the scene out loud, Schreiner told TechNewsWorld. The user doesn't have to snap a picture.

Aipoly’s app can identify hundreds of objects without any training, and can do so three times a second, the company said. It also can understand colors.

It soon will offer the ability to understand complex scenes and the positions of objects within those scenes.

An Android version of Aipoly’s app is in the works.

“There seems to be a general movement towards making technology more accessible,” Schreiner said. “I think people are recognizing the great opportunities. There are close to 250 million people around the world with visual impairments and almost 40 million who are actually blind.”

What the Technology Enables

Facebook’s technology “layers on top of a massive self-learning AI capability that has been advancing incredibly quickly,” remarked Rob Enderle, principal analyst at the Enderle Group. “For instance, this week Nvidia announced a massively improved AI processor and AI server to specifically do work like this and make this kind of capability possible.”

Automating alt text will lead to “massive improvements in real-time indexing of images,” he told TechNewsWorld. It also would allow for more effective hands-free interactions, perhaps while driving.

The technology “could be adapted to seeing prosthetics, helping those that can’t see emulate sight,” Enderle speculated. “This is a big step toward creating more intelligent machines that can be used to help the disabled and to create autonomous machines by enabling far more capable visual recognition systems.”