I’ve worked in and out of security for decades. As a kid working the switchboard at my friend’s motel, I did the same kinds of things people now do with deepfakes, just with far older technology. Back then, all we had were hard-wired phones, but we’d often mess with people late at night by pretending to be people we weren’t.

Later, when I worked with headhunters, I learned that a common way to find people inside companies was to call in, use the name of an executive who was out of town, and convince someone that our unauthorized outside request was legitimate. Good headhunters were extremely skilled at this. Deepfakes make it easier to deceive people, but if you are paying attention, there is no reason to be fooled by them.

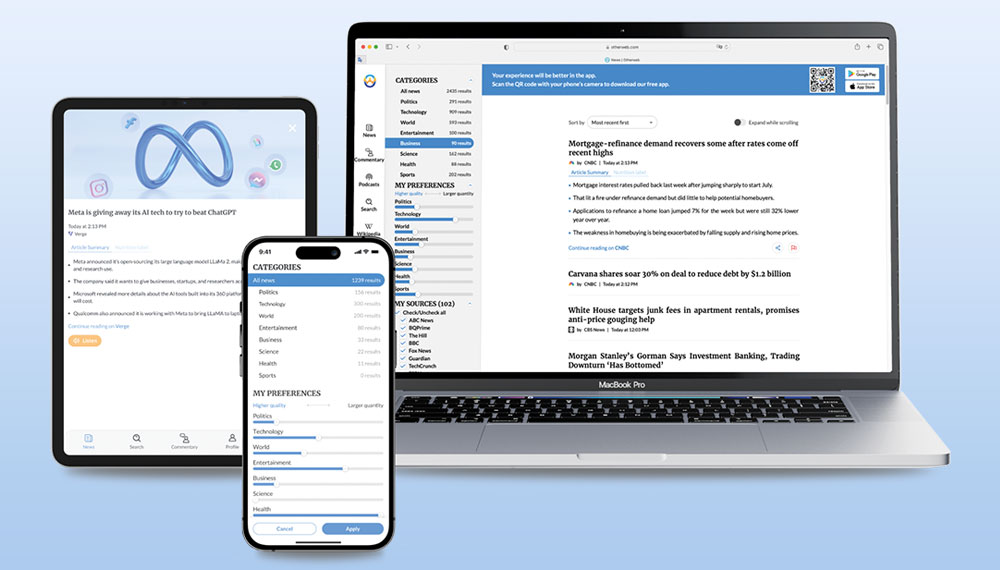

Let’s talk about deepfakes this week, and we’ll close with Otherweb, a news and social media product that does a better job than most at weeding out fake news, which has been a massive source of mistakes, fraud, and embarrassment.

The $25M Deepfake Fraud

What got me thinking about this was HP Wolf Security’s quarterly briefing on threat trends from the prior quarter, specifically its answer to my question about creative new threats.

HP shared a successful fraud scheme in which an employee received calls from deepfaked versions of two executives they knew and was convinced to wire $25 million from a financial institution in Hong Kong to an illicit organization. It took two weeks before the fraud was discovered.

I’m an ex-IBM internal auditor, and all kinds of red flags immediately popped up in my head when I read the story. First, financial institutions typically have extensive controls over their monetary systems because no one wants to invest money in a financial institution that isn’t safe.

There are a lot of industry rules and practices that have been developed over centuries to protect these institutions. One is called “separation of duties,” under which no single person can authorize a significant payment without a physical sign-off from one or more executives. When millions of dollars are involved, the payment might require sign-off from the CFO, the CEO, and an independent board member.

Right off the bat, you can see that this process wasn’t in place at this company, and that the entity committing the fraud not only knew that but also knew who could authorize a payment like this. That strongly suggests an inside job requiring intimate knowledge of the targeted employee’s privileges.

The attackers had no room for failure: had they tried and failed, an alert would typically have gone out inside the company, making other employees aware of the scheme and better able to avoid it.

I have little doubt that the employee who was fooled will become a suspect, since that is the easiest path. I also suspect that other employees were involved: those who told the attackers about the policy gap and identified the target employee, whether that person was tricked or compromised.

Reinstituting and enforcing the “separation of duties” rule, so that no single employee can authorize a payment like this, would significantly reduce the odds of this kind of fraud succeeding. It would raise the degree of difficulty to the point where a different approach, such as simply bribing the employee(s), might work better.
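To make the “separation of duties” idea concrete, here is a minimal Python sketch of a payment check that refuses to release a large wire until enough distinct people, none of them the requester, have signed off. The dollar thresholds, approver counts, and names such as `PaymentRequest` and `can_release` are hypothetical illustrations, not any bank’s actual policy or software.

```python
from dataclasses import dataclass, field

# Hypothetical policy tiers (assumption, not an industry standard):
# (minimum amount in dollars, number of distinct approvers required)
APPROVAL_TIERS = [
    (1_000_000, 3),  # $1M and up: e.g., CFO, CEO, independent board member
    (10_000, 2),     # $10K and up: two approvers
    (0, 1),          # anything smaller: one approver
]


@dataclass
class PaymentRequest:
    requester: str
    amount: int  # whole dollars
    approvers: set[str] = field(default_factory=set)

    def approve(self, approver: str) -> None:
        # The person asking for the money may never sign off on it.
        if approver == self.requester:
            raise PermissionError("requester cannot approve their own payment")
        # A set ignores repeat sign-offs by the same person.
        self.approvers.add(approver)


def required_approvals(amount: int) -> int:
    # Tiers are ordered from highest to lowest, so the first match wins.
    for threshold, count in APPROVAL_TIERS:
        if amount >= threshold:
            return count
    raise ValueError("amount must be non-negative")


def can_release(req: PaymentRequest) -> bool:
    return len(req.approvers) >= required_approvals(req.amount)


if __name__ == "__main__":
    wire = PaymentRequest(requester="employee", amount=25_000_000)
    wire.approve("cfo")
    print(can_release(wire))  # False: one sign-off is not enough for $25M
    wire.approve("ceo")
    wire.approve("board_member")
    print(can_release(wire))  # True: three distinct approvers
```

The sketch only counts sign-offs; it assumes each approver’s identity has already been verified, which is exactly the step a convincing deepfake call tries to short-circuit.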

Kidnapped Loved One Fraud

One of the anticipated frauds uses deepfakes to convince you that a loved one is in danger and that you need to send money immediately to get them out of it. This tactic has been used successfully over the phone for years. Video might make it a little more believable if done well, but doing this well still requires an unusual skill set, at least for now.

It works by making you panic so that you don’t think to call your loved one or law enforcement as you rush to a store to buy a gift card to send to the individual defrauding you. Also realize that in an actual kidnapping, the perpetrator’s safest way to avoid being caught is to kill the victim whether or not you pay the ransom, and if you don’t get your loved one back, what exactly is your recourse?

Therefore, you should always call law enforcement first when dealing with a kidnapping. They have people trained to deal with this, and if engaged, it is far more likely that the perps will be identified and incarcerated. Depending on the size of your local police department, you might want to consider calling the FBI since it may have more substantial resources than your local PD to deal with this kind of fraud.

Develop a safe word, never shared outside the family, that confirms a caller claiming to be your kidnapped loved one really is who they say they are, so you don’t spin up law enforcement needlessly. You can also call their cell phone to see if they answer. If the call is fake, this is one of the quickest ways to expose it.

Giving Voices to Victims

One interesting use of deepfake technology is recreating the voices of children killed by gun violence. The effort is called Shotline, and it uses those voices, very powerfully I might add, to let those killed by gun violence speak out against it. Giving voice to the voiceless sends a powerful message to politicians and their supporters who haven’t acted aggressively to stop gun violence.

Granted, this would need to be done with the permission of the deceased child’s parents. I expect that hearing the voice of your dead child speak out against the violence that killed them might be somewhat cathartic for the parents. It also sends a strong message to those who put these kids at risk and should increase empathy among politicians who have kids of their own, both for the victims and, particularly, for the victims’ parents.

It is one of the most powerful and potentially effective ways to drive needed change. Pushing back on adults who promote gun reform is one thing, but pushing back against the voices of dead children is problematic and possibly career-ending if constituents identify with the kids or their parents.

This is all to say that deepfakes can also be used to do good.

Wrapping Up

Deepfake technology is being used to do harm, but existing methods designed to reduce or prevent fraud should be adequate to protect against it. In politics, we need new laws and ways to enforce them; some of this is in the works, though I doubt it will be adequate. Finally, deepfakes can be used for good by giving voice to the voiceless. I expect we’ll see more of this.

When used for evil, deepfakes are just a step up from some of the telephone scams we’ve been dealing with for decades. As long as you approach the related calls with your head up and eyes open, I don’t think the risk is much worse than we had before. After all, how often do political ads tell the truth, even without deepfakes?

With proper controls that are validated and enforced, and by staying alert to the potential for a well-crafted fraud attempt, we should be able to weather this storm. However, if we are at all compromised or aren’t thinking defensively, we will be increasingly vulnerable to fraud that could change our lives, and not for the better.

Otherweb

Otherweb is a news and social media platform that aggressively filters junk and fake news out of its feed. You can pick areas of interest, and the news side of the app then gives you updates with just the facts and little opinion. On the social media side of the app, you can see and participate in related discussions.

(Image Credit: Otherweb)

You can run the service on Windows, but it tends to work better on a smartphone. It gives you a more focused way to stay up to date on current events, with less risk of forwarding a fake story to friends, family, or, worse, co-workers or managers who may think less of you as a result. This happened to me early in my career, and it took a long time to recover from the reputational damage of sharing a fake news story that I believed at the time.

Otherweb’s newest feature is called “Discussions.” It creates a safe social media environment where trolls, instigators, and other toxic sources are aggressively weeded out, leaving a place to discuss current events without fear of being attacked, swatted, or otherwise punished for your legitimate views and concerns.

I know a lot of us are tired of seeing bad actors, fraudsters, and conspiracy theorists constantly filling our feeds with BS that they have either made up or passed along from others without checking. Good decisions come from solid information, and fake news is causing many of us to make bad decisions. Getting rid of that influence would be good for all of us. As a result, Otherweb is my Product of the Week. It’s free and worth checking out.