In the ever-evolving world of technology, 2024 brought some exciting innovations alongside an alarming number of trends that expose the pitfalls of our current tech culture.

From overhyped AI gimmicks to privacy erosion and unsustainable hardware practices, here are some of the worst tech trends of 2024 that have frustrated consumers and industry leaders and are unlikely to abate next year.

Generative AI dominated 2023, but by 2024, the trend spiraled into absurdity. Countless companies have rolled out AI-powered tools that address non-existent problems — or create entirely new ones.

AI now generates everything from poorly edited videos and unintelligible blog posts to automatically written emails that require human intervention to fix. Tools that claim to offer productivity boosts often result in inefficiencies because of their flawed outputs.

The flood of low-quality AI products has undermined trust in genuinely helpful AI innovations. Small businesses and consumers alike are overwhelmed by tools with overblown marketing promises.

Many of these deficient AI solutions add another layer of automation without offering real value. This overproduction has created noise, making it harder to distinguish truly transformative tools from mere gimmicks.

Former Oracle CEO Larry Ellison once famously said, “Privacy is Dead.” However, privacy has been resurrected and killed more times than a Tyrannosaurus Rex in a “Jurassic Park” sequel.

Digital privacy continues to erode in 2024 as big tech companies push the boundaries of data collection under the guise of personalization. This year, the rise of AI-driven surveillance tools has become particularly concerning. Facial recognition is now integrated into everything from retail stores to public transportation systems without sufficient regulation or oversight.

Hyper-targeted ads across platforms and connected technologies have reached a tipping point. New technologies scrape data from various devices at unprecedented levels, often without users’ consent or precise opt-out options. For instance, smart home devices have increasingly come under fire for tracking conversations and usage patterns far beyond their intended purpose.

Perhaps most worrying is the resurgence of the “we’re improving your experience” excuse. Tech companies increasingly sidestep GDPR-like protections by using convoluted terms of service agreements that make opting out prohibitively difficult. This sets a dangerous precedent for future interactions between consumers and technology.

Most tech users will identify with this trend. In 2024, the “everything-as-a-service” model has reached absurd new heights.

From software to hardware, companies are turning more and more products into monthly subscriptions. Consumers are now paying subscriptions for products that were traditionally one-time purchases: car manufacturers charging for heated seats, printers requiring monthly fees to unlock ink usage, and even smart home locks demanding ongoing payments to access advanced features.

The subscription model has become synonymous with monetizing basic functionality. What started with streaming platforms has now spread to nearly every product category. It has become overwhelming, financially unsustainable, and increasingly frustrating for many consumers. Companies risk alienating their customer base by prioritizing recurring revenue over user experience.

Tech companies have revived a troubling trend of overhyping products that don’t exist in usable forms. This year has been marked by grand promises of game-changing devices and services that either underdeliver or never materialize.

One example is the push for AI PCs, where marketing campaigns tout devices with unmatched capabilities that remain largely theoretical. Similarly, augmented reality (AR) platforms have made headlines, yet most consumers still lack meaningful use cases beyond demo videos and niche applications.

This trend mirrors the vaporware hype of the early 2000s, where buzzwords like “digital transformation” were attached to half-baked products. In 2024, buzzwords such as “quantum-ready” and “AI-powered” are increasingly slapped onto underdeveloped offerings to ride the tech wave, undermining consumer trust.

While I am optimistic about the rise of PCs (Windows or Mac, whether x86, Arm, or Apple Silicon-based) with AI technology integrated at the silicon level, the jury is still out on whether mainstream consumers have drunk the AI Kool-Aid.

The unsustainable tech upgrade cycle will worsen in 2025. Major hardware manufacturers continue to push minor annual refreshes of devices while retiring older models earlier than necessary. Smartphones, laptops, and wearables now seem designed for obsolescence, forcing users to replace functional devices far too soon.

This approach has generated alarming levels of electronic waste. Consumers face limited repair options as companies lock down parts and restrict third-party fixes, leading to devices being thrown away rather than repaired. Additionally, the push for disposable devices contradicts the industry’s public commitments to sustainability.

In parallel, new hardware launches often emphasize gimmicky features, like foldable screens or AI-generated wallpapers, that offer little utility. Meanwhile, genuine performance improvements are increasingly incremental, leaving users questioning whether upgrades are worth the cost.

AI surveillance tools have seen rapid adoption, particularly in workplaces and schools. Employers increasingly turn to AI monitoring software to track productivity by analyzing keystrokes, screen activity, and facial expressions. This invasive approach erodes trust between employers and employees while normalizing intrusive surveillance practices.

Similarly, schools have begun implementing AI tools to monitor students’ attention and behavior, often with flawed algorithms. These technologies reinforce punitive environments and disproportionately impact vulnerable communities. Critics argue that such systems prioritize control over genuine engagement or well-being.

Social media algorithms in 2024 have become worse than ever, prioritizing engagement metrics over quality content. Platforms are flooded with clickbait, misinformation, and sensationalized posts designed to keep users scrolling endlessly. Genuine connection — once the core promise of social media — has been replaced by a relentless pursuit of ad revenue.

Adding insult to injury, platforms have ramped up the push for paid verification and algorithmic boosts, forcing creators to pay for visibility. This pay-to-play model exacerbates inequality in content discovery, pushing smaller creators to the margins.

While technology has the potential to improve lives, 2024 has brought forth trends that emphasize profit, surveillance, and short-term gains over long-term innovation and ethical considerations.

From the glut of useless AI tools to worsening e-waste and dystopian surveillance practices, it’s clear that the tech industry needs a course correction.

Consumers, regulators, and innovators alike must push for responsible, meaningful advancement, since failing to do so will allow these trends to define the future of technology.

While artificial intelligence has juiced the marketing departments of smartphone makers like Apple and Samsung, it isn’t generating much enthusiasm among users, according to a survey released Monday by a used electronics selling site.

The survey by SellCell of more than 2,000 iPhone and Samsung users found that 73% of iPhone and 87% of Samsung users said that the AI features on their phones added little to no value to their smartphone experience.

Users’ low opinion of the AI on their phones reflects confusion in the market. “While companies are saying ‘now with AI’ or ‘AI included,’ they’re not telling users what to do with it,” said HP Newquist, executive director of The Relayer Group, a business consulting firm in New York City.

“They’re telling users, you now have access to AI. You can now use AI,” he told TechNewsWorld. “They’re just saying, here it is. You’ve got it now. And quite frankly, that’s not a compelling reason to use AI.”

“We’re getting AI thrust at us, and I think consumers are completely nonplussed by that,” he observed.

“We’re finding the same exact thing in corporate America,” he continued. “They’re getting told, you need to use generative AI. You need to use agentic AI. But they’re not being told how specifically it can benefit them. Until that’s made clear both at the consumer and the corporate level, you are going to have a fairly tepid response from first-time users.”

Privacy concerns may be dampening enthusiasm about AI among iPhone users, contended Mark N. Vena, president and principal analyst at SmartTech Research in Las Vegas. “Apple users have high expectations for data protection and skepticism about whether the features offer meaningful improvements beyond what competitors already provide,” he told TechNewsWorld.

“Limited compatibility, with AI features likely restricted to newer iPhone models, may also alienate users of older devices,” he added.

On the Samsung side of things, Vena continued, Galaxy AI lacks differentiation from other Android-based AI offerings, which may reduce excitement. “Samsung’s features might appear incremental rather than groundbreaking.”

“Additionally, inconsistent user experiences with Samsung’s software and AI across devices could contribute to lower enthusiasm, compared to the more tightly integrated Apple ecosystem,” he said.

Greg Sterling, co-founder of Near Media, a market research firm in San Francisco, asserted that one of the central problems with Apple Intelligence is that it’s not well explained or well understood by the public. “Apple needs to do more to educate people about what the features are and when they will be available,” he told TechNewsWorld.

Tim Bajarin, president of Creative Strategies, a technology advisory firm in San Jose, Calif., agreed. “AI integration in smartphones is new and not well understood by the average user,” he told TechNewsWorld. “Google and Apple need to do more tutorial-like posts that show users the new AI features and how to use them.”

“AI requires you to learn how to prompt, and it’s not easy,” added Rob Enderle, president and principal analyst at the Enderle Group, an advisory services firm in Bend, Ore.

“So we have a lot of training in front of us with regard to users knowing how to use this stuff,” he told TechNewsWorld. “I would expect the survey to be bad this early simply because Apple Intelligence hasn’t been available for very long, and people just don’t know how to use it yet.”

Sterling added that the multiple features clustered under the rubric Apple Intelligence are rolling out incrementally over time, so users haven’t really seen the concrete benefits yet. “In a year or two, I suspect this survey would have different outcomes,” he predicted.

Will Kerwin, an equity analyst with Morningstar Research Services in Chicago, also cited the drawn-out rollout of Apple Intelligence as a source of consumer apathy toward AI on their iPhones. “We believe it’ll take consumers time to fully bake in how Apple Intelligence is most useful to them and adapt personal habits,” he told TechNewsWorld.

“This all informs our view that Apple iPhone sales driven by AI will be stronger in fiscal 2026 than they are currently in fiscal 2025,” he said.

Runar Bjørhovde, an analyst with Canalys, a global market research company, added: “The stark reality is that most people don’t buy phones because of AI. They buy because of different features.”

“If we think of the type of features that AI has enabled, they are not that interesting right now,” he told TechNewsWorld.

“It’s honestly not that surprising right now that AI features might disappoint people a bit because they’re not as advanced in reality as some of the marketing and messaging say they are,” he said.

Bjørhovde maintained that many tech firms are having an “existential crisis,” where they’ve lost the huge hype and interest that people have had in them for the last 20 years.

“They have to come up with new stories to try and get people interested,” he contended. “So, AI is a gold mine right now. I believe it can give us some really interesting innovations in a few years. But for now, it is this marketing bubble where people don’t actually know what to believe.”

The SellCell survey also found that about one in six iPhone users (16.8%) said they would consider switching to Samsung if it offered better AI features. In contrast, only 9.7% of Samsung users said they’d consider moving to Apple for better AI features.

It added that the percentage of users loyal to Apple has declined from 92% in 2021 to 78.9% now. That compares to a decline from 74% to 67.2% over the same period for Samsung.

“In general, the excitement around Apple’s annual upgrade cycle has declined a lot,” said Ross Rubin, the principal analyst at Reticle Research, a consumer technology advisory firm in New York City.

“These AI features are an attempt to inject something new and exciting into the experience,” he told TechNewsWorld. “But consumers are looking for a baseline of functionality and don’t think the platform is as much of an issue anymore.”

Still, the finding that so many Apple users might be willing to jump ship for AI is surprising, he acknowledged. “Apple users just tend to be far more likely to opt into Apple services,” he explained. “Because of the App Store investments, you can’t necessarily move all that stuff to another platform. So that makes the reported greater willingness to switch surprising.”

However, not everyone sees Apple’s fan base as waning. “We don’t see brand loyalty slipping in our surveys,” Bajarin declared. “We expect Apple to have a blockbuster holiday season, with iPhone sales and drawing many ‘switchers’ to the Apple ecosystem.”

“We also don’t think loyalty to Apple is going away,” Kerwin added. “In our view, iPhone users are significantly likely to remain iPhone users, and AI features are just another means of locking them into Apple’s ecosystem.”

Many amazing products launched this year, and we’ll cover some of them. However, unlike most years when the decision was difficult, one product stood out well above the rest as revolutionary. What made it particularly special was that it was a bold bet that paid off and helped its manufacturer become one of the most valuable companies in the world and, for a time, the most valuable.

Typically, I don’t do a Product of the Week for this column as it focuses on the Product of the Year, but I ran into a confection that I’ve become almost addicted to, thanks to my love of dark chocolate, so I’m compelled to squeeze it in. It’s from a small company called Sweet as Fudge. So, before we revisit 2024 and discuss some of the standout products, let’s talk about this chocolate.

The product is Dark Chocolate Triple-Dipped Malt Balls. There’s also a sugar-free version that’s surprisingly good. A pound costs around $15, but these things are so good. I keep them in the refrigerator so they melt more slowly in my mouth. If you are into chocolate and like malt (chocolate malt is my go-to drink in the summer), give them a try this holiday season.

Now, on to the main event. Let’s begin with the contenders that didn’t quite make the top of my list and conclude with the one that earned the title of Product of the Year.

Image Credit: Fisker

It’s a shame this company didn’t survive the year, but the Fisker Ocean — which I had planned to buy — was arguably the best electric SUV on paper. It had a bunch of cool, unique features like a solar panel roof and a pull-up table so the driver could enjoy a snack or meal in the car without making a mess.

This eSUV was well-designed and had decent range, and I thought it was one of the best-looking vehicles on the market. Operating inefficiencies and apparently some financial mismanagement killed the company and the car. RIP Fisker.

Image Credit: Audi Communications

I bought a used 2022 Audi e-tron GT after Fisker went under. Right after I bought it, Audi released the 2025 model, and it is a beast. It has nearly 1,000 HP, giving it blazing performance (more than I need as my base car is quick enough), hydraulic suspension allowing it to adjust its height nearly instantly, and the car is beautiful.

Oh, they added around 100 miles of range over my car, making it far more useful for a daily driver. At $171,000, it is out of my budget range, but wow, what a car!

Image Credit: Hyundai

I think Hyundai’s approach with the Ioniq 5 N electric car is the way to go for those who miss the engine sound and gear shifting in a manual gas car. This Hyundai is arguably the most fun electric car in the world. It does a decent job faking engine sounds, and shifting feels like you are shifting a car — even though this is emulated and actually makes the car slower. But it is incredibly fun to drive and reasonably quick (0 to 60 in 3.25 seconds).

The Hyundai Ioniq 5 N isn’t badly priced for a performance car that lists under $70,000, and it’s likely quicker than your buddy’s far more expensive exotic car.

Wow, talk about an offering that changed the world! ChatGPT is the core technology under both Apple’s and Microsoft’s AI efforts. Its Dall-E capability for images is impressive (as is Google’s Gemini, which I also use), and it has changed the way many of us write software, create images, and even write complete works.

ChatGPT has been advancing incredibly quickly, and with artificial general intelligence (AGI) it promises to change how we create what we read. ChatGPT and its peers are starting to change what we watch. It is a truly revolutionary platform, though Google’s Gemini Advanced is no slouch either. While ChatGPT launched before 2024, it became the basis for both Microsoft’s and Apple’s huge AI efforts this year.

Ever since the PC came out, we’ve essentially been asking to go back to terminals. We love the freedom the PC gives us, but we aren’t crazy about the complexity of supporting them ourselves, particularly at scale. Microsoft helped create the problem, and, to be fair, it has mitigated a lot of the related pain by improving Windows.

However, Windows 365 Link is a nearly instant-on, terminal-like device that promises, and mostly delivers, Windows capabilities with the reliability and simplicity of a terminal. It’s kind of a “Back to the Future” product and a bit of a man-bites-dog story. I expect this to be the future of the PC eventually.

Ever since Elon Musk bought Twitter, many of us have been looking for an alternative. Each time we got excited about a new effort, we were disappointed.

Bluesky has been the closest thing to a better Twitter so far, and more and more people I know have migrated to it. Given its distributed architecture, it also has some technical advantages over X/Twitter.

Its moderation, in my opinion, appears to be better than Twitter’s. It was a huge step in the right direction, and I hope it makes it into our future.

The Google Pixel 9 Pro Fold is now the phone I carry, and it is awesome. The updates to the phone have been helpful. For instance, it locks if it moves suddenly, as if someone stole it. It also locks if you are reading on a plane when it takes off, but you can just log in again. It folds out into a small tablet, so if I don’t have my glasses handy, I can still make the text large enough to read. Speaking of reading, it is the best e-book alternative since the discontinued Microsoft Surface Duo.

I wish Google had used a Qualcomm Snapdragon instead of its own processor, but the difference isn’t as bad as it was with the first Fold. I’m just loving this phone.

Huawei has demonstrated remarkable ingenuity by navigating technological restrictions to deliver one of the most desirable smartphones in the world, despite some initial growing pains because this was almost an entirely new platform.

Given that Huawei wasn’t allowed to buy the technology from the U.S., this three-screen phone that goes from iPhone to iPad size is a technical marvel. It showcases where we will likely go with phones and set itself apart from competing devices this year.

Huawei remains impressive, considering the problems posed by the conflicts between the U.S. and China this year. Impressive work.

Lenovo is another company that stood out this year in terms of sheer innovation. The product that most caught my eye was the ThinkBook Auto-Twist AI PC Concept.

What makes this PC different is that it automatically pans the screen and on-board camera based on where you are in the room. This feature is particularly handy for those of us who have to move around during team calls or need to watch a how-to video while doing something else.

Watching that screen swivel automatically so it remained focused on the user was awesome. As AI advances, I expect we’ll see even more innovation when it comes to AI PCs.

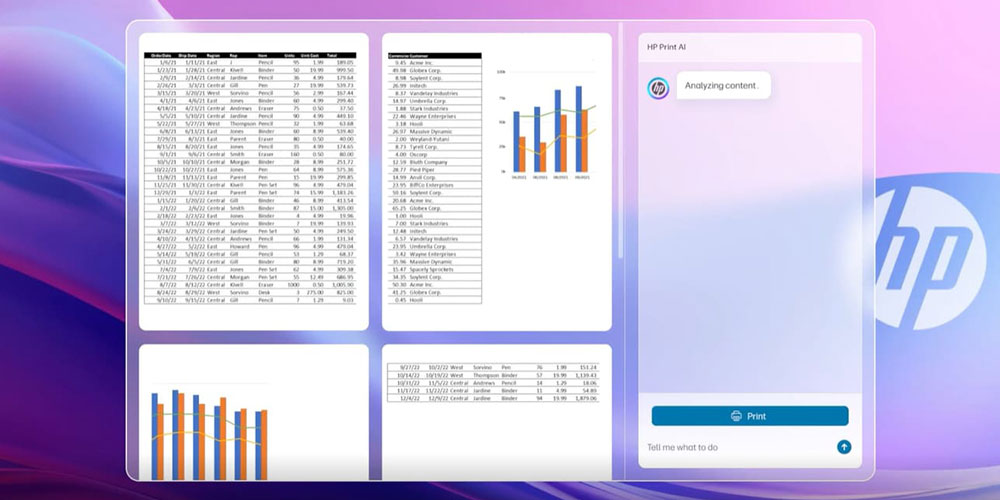

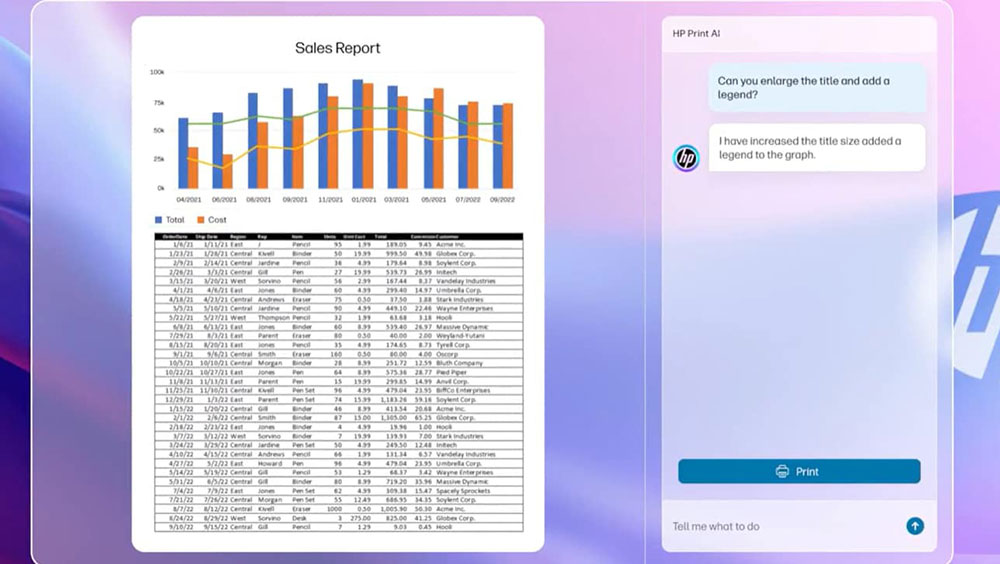

For me, 2024 was defined by AI innovations. The HP Print AI offering, released in September, directly addresses a lot of our aggravation with printers, automatically formatting things for pages without cutting off the borders and positioning the data and graphics so that each print job is perfect.

We’ve all had issues with printing a document and not having it lay out properly. Spreadsheets are the worst, often printing one line or column on a page and then kicking out hundreds of useless pages that make little or no sense, like this:

Image Credit: HP

HP Print AI will auto-format print jobs, so the printed document is useful. The AI analyzes your print job based on past training to determine the optimal format. Then, it auto-configures for that result so each print job is as perfect as the AI can make it, like this:

Image Credit: HP

Printer technology needs to be moved into this decade, and this software from HP should do that. Expect to see more advances like this from many companies that plan to use AI to address customer frustrations with their products.

The BDX robots are part of Project GR00T and use Nvidia’s Jetson robotic technology. You’ll see them at Disney parks, and eventually, you’ll be able to buy one.

This little guy is the closest to a real “Star Wars”-like robot I’ve ever found. I wish I’d been smart enough to invest in Lucasfilm back then rather than spending my money on going to that first “Star Wars” movie over and over and over again. BDX showcased how far robotics has come, and we’ll be seeing a lot of amazing robots you can buy in 2025.

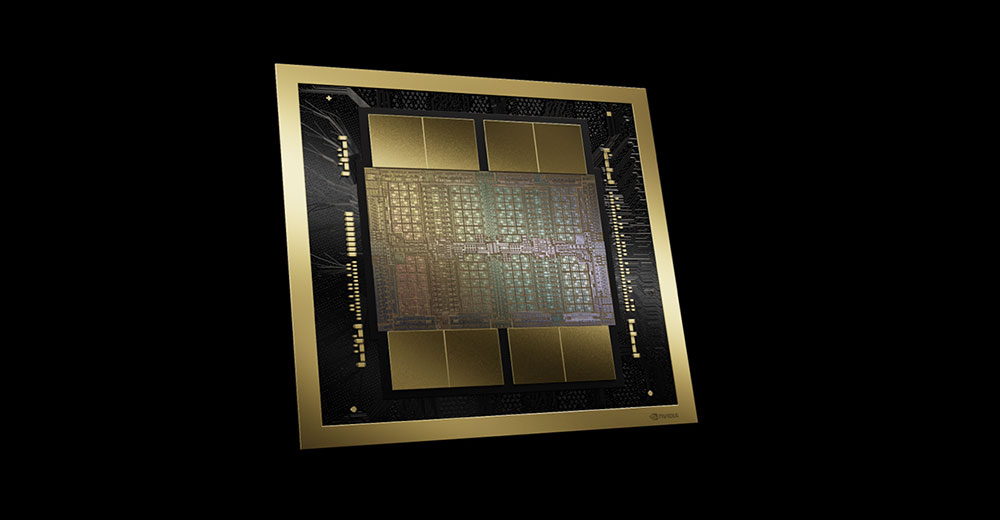

Image Credit: Nvidia

When Nvidia CEO Jensen Huang first presented Blackwell, it was a fantastic event that showcased how a company should release a revolutionary product. This GPU is massive in terms of performance and power requirements, and it is forcing a gigantic shift from air to water cooling in cloud and enterprise data centers as the world pivots to AI.

The backstory on this part and Nvidia’s entire AI effort is a tale of legend. Back in the early 2000s, when only IBM was really working with AI, Jensen Huang became convinced it was a much more near-term future event. For much of the next 20 years, Nvidia’s financial performance dragged as the company basically bet its future on AI. Had Huang not been a founder, he likely would have been fired.

Then OpenAI asked for help, and Nvidia provided it. Thanks to Nvidia, we now have generative AI that works. Blackwell is the current culmination of this work, a massive GPU that is extremely power-hungry but also incredibly efficient. While it uses a ton of power, it does much more work than any group of other GPUs or NPUs can do with that same power.

AMD’s Threadripper CPU was equally innovative, but it was designed for existing market needs. Nvidia was working on Blackwell before AI became a market, and that’s just never done. By taking what seemed to be an unreasonable risk and executing on it, Nvidia caught its competitors sleeping, and now Nvidia is nearly synonymous with AI.

Back when pensions were common, CEO compensation was more reasonable, and boards were more supportive of long-term strategic moves, an effort like this wouldn’t have been that unusual. But in today’s world, you just don’t see that.

Nvidia’s Blackwell effort gave me hope that the U.S. might be able to return to a more strategic future. It caught the imagination of a world increasingly focused on and concerned about AI. Blackwell was a once-in-a-generation leap in performance and a massive bet that could have gone badly, so it is my choice for Product of the Year.

As a lifelong New York Giants fan, it’s been hard to suffer through the 2024 season, culminating last weekend in the Giants’ most recent debacle, losing to the below-average New Orleans Saints on a botched field goal in the last seconds of the game.

In my disgust in the aftermath of the game, it occurred to me: Is present-day Intel the equivalent of the 2024 Giants? It sounds like a ridiculous question, but the similarities are eerie.

Let’s face it: The two titans of their industries — the New York Giants in professional football and Intel in technology — have struggled through severe scrutiny and poor performance the past few years. Both were once at the top of their fields, making headlines and defining periods, so it’s easy to draw analogies between them.

For the Giants, beyond the sheer shoddiness of the on-field performance over the past few years (the team hasn’t been to the Super Bowl since 2012), management made one of the most idiotic decisions of all time before the season began by extending a questionable long-term contract to “franchise” quarterback Daniel Jones and allowing Saquon Barkley to sign with its divisional rival, the Philadelphia Eagles. Now, Barkley is having one of the greatest seasons of all time for a running back.

As for Intel, the company has struggled to maintain market share in the PC space over the past few years. It conceded the smartphone space after it passed over Apple’s request for a suitable silicon solution for its iPhone in 2007 (a decision with ecosystem ramifications that Apple has since taken advantage of), and it missed the overall industry movement to Arm-based architectures for mobile devices and even laptops.

Both organizations are currently under fire for their (at least perceived) inability to give fans and customers a modicum of faith that turnarounds are in the making. Although there are similarities between their difficulties, a deeper examination shows that Intel’s problems are fundamentally distinct from those of the 2024 New York Giants and are being addressed in a way that sets the company apart.

The New York Giants, a legendary NFL team that has won four Super Bowls, was under tremendous pressure going into the 2024 campaign.

Recent years have been characterized by inconsistent play, dubious coaching choices, and poor player development. The team has had difficulty adjusting to today’s NFL, where creative play-calling and analytics-driven tactics are paramount.

The Giants have mostly failed to take advantage of their chances despite brief flashes of potential, leaving supporters discouraged, experts doubtful of the team’s prospects, and season ticket holders like me exasperated.

Intel used to be the undisputed leader in its industry. The company literally controlled the semiconductor market for many years, setting the benchmark for chip innovation and performance. But a slew of upheavals in the 2020s put its hegemony in jeopardy. The emergence of rivals like AMD and Nvidia and the advanced manufacturing technology pioneered by Taiwan Semiconductor have compelled Intel to confront its weaknesses.

The leading cause of Intel’s problems is the company’s delay in moving to advanced manufacturing nodes. Due to setbacks with its 10nm and 7nm nodes, Intel lost market share in essential categories, while Taiwan Semiconductor and Samsung advanced with their state-of-the-art 5nm and 3nm processes. These challenges were exacerbated by the increasing use of Arm-based architectures, especially in AI and mobile applications, where Intel’s x86 architecture has struggled to stay competitive.

Although the Giants and Intel face formidable obstacles, their responses distinguish them. The Giants have frequently seemed hapless, switching quarterbacks and coaches in an attempt to find a short-term solution. Because the team has been unable to develop a clear plan of action, fans and experts are beginning to doubt the franchise’s long-term survival.

In contrast, Intel has attempted to take serious action to overcome its obstacles. Under the direction of CEO Pat Gelsinger, the company launched a daring plan to regain its place at the forefront of the semiconductor industry.

The core of this endeavor is Intel’s IDM 2.0 strategy, which aims to increase its role as a foundry for third-party clients while modernizing its manufacturing capabilities. By doing this, Intel hopes to take on Taiwan Semiconductor and Samsung head-to-head as both a manufacturing giant and a chip designer.

Additionally, Intel has increased its focus on cutting-edge technologies. Its attempts to create specialized chips for data centers and its investments in AI-specific hardware, such as the Gaudi AI accelerators, demonstrate a proactive approach to the upcoming wave of computing innovation. In fairness to Intel, these actions have revealed a business willing to own up to its mistakes while working to influence the future rather than merely responding to it.

An organization’s ability to overcome hardships is largely dependent on its leadership. With numerous coaching staff changes and a front office that frequently appears out of step with the team’s demands, the Giants have had difficulty establishing a permanent leadership structure. This unpredictability has led to a lack of direction and identity on the field. Watch any of the Giants’ losses over the past few seasons, and it’s hard to dispute this.

In contrast, Intel enjoyed reasonable unity and support when Pat Gelsinger rejoined the company. Gelsinger prioritized a return to Intel’s engineering foundation while cultivating an innovative and accountable culture. Ambitious aims and a willingness to take chances characterized his tenure, which contrasts sharply with the Giants’ more cautious strategy.

The Giants and Intel are both burdened by their histories. The Giants’ rich past makes them feel both proud and burdened, which makes their recent setbacks even more disappointing. Because of the team’s illustrious background, supporters find it challenging to make sense of its current hardships in light of its former success.

Being a pioneer in its industry comes with expectations, which Intel also struggles with. The impact of the company’s errors is exacerbated by its standing as a technology innovator. However, Intel’s heritage offers distinct advantages, including a wealth of technical know-how, solid industry ties, and a still enviable reputation, especially with legacy PC OEMs like HP, Dell, and Lenovo. These resources have put Intel in a position to build on its prior achievements and focus on future expansion.

The timelines of their respective sectors represent one of the most significant distinctions between Intel and the Giants. NFL teams follow an annual cycle, and their fortunes frequently fluctuate depending on how one season turns out. Failures are front-page news, and their immediacy makes it challenging for the Giants to bounce back in the near term.

Timelines are longer in the tech sector, though. Semiconductor development cycles span years, and strategic choices may not show their full effects for a decade.

Intel has more time to accomplish its ambitions and bounce back from setbacks because of this longer horizon. Intel’s problems have been more gradual and (in theory) allow for course correction and progressive development. Still, Wall Street is typically not patient, and investors get nervous when they don’t see positive movement in leading indicators like market share gains and revenue increases.

Despite its struggles, Intel is not a business that is content to let things go. Intel is setting itself up for a long-term resurgence with its IDM 2.0 strategy, AI initiatives, and redoubled emphasis on silicon excellence.

Some now contend that Intel will never regain its position as the semiconductor industry leader and that its issues are so complicated that they might not be resolved. Due to the company’s manufacturing delays, AMD and Nvidia have increased market share, further widening the gap as Intel prepares for the 18A production phase.

Furthermore, Intel’s foundry sector has had trouble attracting customers, which has made its recovery attempts more difficult. Pat Gelsinger’s resignation highlights the need for strong leadership and creative ideas after his tenure saw a significant drop in stock value. Restoring investor trust and industry stature will need strategic restructuring and a fresh emphasis on execution, which will be highly challenging due to internal resistance to whoever takes over as Intel’s leader.

It’s easy to forget that many analysts welcomed Gelsinger’s return to Intel in 2021 with hope because they thought his familiarity with the firm, his grasp of the silicon industry, his focus on customers, and his visionary attributes were precisely what was required to turn the giant around.

However, under his direction, Intel struggled to overcome several obstacles, such as a lag in manufacturing improvements and heightened competition from rivals like AMD and Nvidia. Due to these problems, Intel’s stock value dropped significantly, wiping out almost $150 billion in market capitalization.

Although some have claimed that Gelsinger just needed more time to carry out his plan effectively, the company’s board thought differently and finally decided that a drastic change in direction, starting with a change in CEO, was required.

Despite being interesting and even amusing, the comparison between Intel and the 2024 New York Giants ultimately falls short in light of all that.

Even if both organizations are going through difficult times, Intel’s approach shows a degree of strategic vision and flexibility that the Giants have not yet shown. Intel is building the foundation for a future that solidifies its position as a leader in the technology industry, not just battling to remain relevant. If Intel is a behemoth, it is undergoing reinvention rather than decline, which it must do if the company is to grow.

There are reasons to be optimistic for Intel. Its Lunar Lake family of processors is showing favorable performance and battery life comparisons to Apple Silicon and even offerings from Qualcomm, which has generated a great deal of favorable press with its Snapdragon X Elite solutions for laptops.

Intel’s incoming CEO, whoever that might be, will have one of the greatest corporate turnaround challenges in tech history. The company will have to dramatically cut headcount, which will make Intel’s cut of 15,000 people earlier in the year look like a pinprick.

Intel seems committed to its foundry strategy, which will require years of investment before it yields significant returns. In a post-Biden Administration world, the company may be unable to rely on the federal government for further investment in its foundry business. To top all of that, some customers may not be comfortable with Intel’s “church and state” strategy of manufacturing non-Intel chips in Intel factories.

Intel’s chances for success will largely depend on its new leader. I advise hiring an outsider rather than an Intel insider, who might be influenced by legacy Intel personnel who have developed a survival mentality and are reluctant to take risks. Intel’s new CEO will likely be the most-watched tech hire of 2025, as their leadership will provide critical insights into the company’s future.

The new CEO will also have to deal with a management team that has survived the many cuts the company has gone through and might be unwilling to make the necessary changes Intel must undertake, as legacy management will be in “survivor” mode and unlikely to take risks.

As for the Giants, I’m horrified to state that I’m not optimistic. For the first time in my 46 years as a season ticket holder (shelling out over $200,000 during that period), I’m contemplating giving them up. Or maybe I’ll just play Madden 2025 on my Xbox One for the remainder of the season and not waste my time watching Big Blue suffer.

Fortunately for Intel, it is not at that point. The company controls its destiny, but time is not on its side, so its incoming CEO must show results quickly and tangibly.

Some software developers disagree with the open-source community on licensing and compliance issues, arguing that the community needs to redefine what constitutes free open-source code.

The term “open washing” has emerged, referring to what some industry experts claim is the practice of AI companies misusing the “open source” label. As the artificial intelligence rush intensifies, efforts to redefine terms for AI processes have only added to the confusion.

Recent accusations that Meta “open washed” the description of its Llama AI model as true open source fueled the latest volley in the technical confrontation. Some in the industry, like Ann Schlemmer, CEO of open-source database firm Percona, have suggested that open-source licensing be replaced with a “fair source” designation.

Schlemmer, a strong advocate for adherence to open-source principles, expressed concern over the potential misuse of open-source terminology. She wants clear definitions and guardrails for AI’s inclusion in open source that align with understanding the core principles of open-source software.

“What does open-source software mean when it comes to AI models? [It refers to] the code is available, here’s the licensing, and here’s what you can do with it. Then we are piling on AI,” she told LinuxInsider.

The use of AI data is being mixed in as if it were software, which is where the confusion within the industry originates.

“Well, the data is not the software. Data is data. There are already privacy laws to regulate that use,” she added.

The Open Source Initiative (OSI) released an updated definition for open-source AI systems on Oct. 28, encouraging organizations to do more instead of slapping the “open source” term on AI work. OSI is a California-based public benefit corporation that promotes open source worldwide.

In a published interview elsewhere, OSI’s Executive Director Stefano Maffulli said that Meta’s labeling of the Llama foundation model as open source confuses users and pollutes the open-source concept. This action occurs as governments and agencies, including the European Union, increasingly support open-source software.

In response, OSI issued version 1.0 of the Open Source AI Definition (OSAID) to define more explicitly what qualifies as open-source AI. The document follows a year-long global community design process. It offers a standard for community-led, open, and public evaluations to validate whether an AI system can be deemed open-source AI.

“The co-design process that led to version 1.0 of the Open Source AI Definition was well-developed, thorough, inclusive, and fair,” said Carlo Piana, OSI board chair, in the press release.

The new definition requires open-source models to provide enough information about their training data that a skilled person could recreate a substantially equivalent system using the same or similar data, noted Ayah Bdeir, lead for AI strategy at Mozilla, in the OSI announcement.

“[It] goes further than what many proprietary or ostensibly open source models do today,” she said. “This is the starting point to addressing the complexities of how AI training data should be treated, acknowledging the challenges of sharing full datasets while working to make open datasets a more commonplace part of the AI ecosystem.”

The text of the OSAID v.1.0 and a partial list of the global stakeholders endorsing the definition are available on the OSI website.

Schlemmer, who did not participate in writing OSI’s open-source definition, said she and others have concerns about the OSI content. OSAID does not resolve all the issues, she contended, and some content needs to be backtracked.

“Clearly, this is not said and done right, even by their own admitting. That reception has been overwhelming, but not in the positive sense,” Schlemmer added.

She compared the growing practice of loosely referring to something as an open-source product to what occurs in other industries. For example, the food industry uses the words “organic” or “natural” to suggest an assumption of a product’s contents or benefit to consumers.

“How much [of labeling a software product open source] is a marketing ploy?” she questioned.

Open-source supporters often boast about how the technology is deployed globally. Only rarely are license enforcement issues cited.

Schlemmer admitted that economic pressures drive changes in open-source licenses. It often becomes a balancing act between sharing free open-source code and monetizing software development.

For example, companies like MongoDB, her own Percona, and Elastic have adapted their licensing strategies to balance commercial interests with open-source principles. In these cases, license violations or enforcement were not involved.

“Several tools exist in the ecosystem, and compliance groups in corporate departments help people be compliant. Particularly in the larger organizations, there are frameworks,” said Schlemmer.

Individual developers may not recognize all those nuances. However, many license changes are based on determining the project’s economic value to its original owner.

Schlemmer is optimistic about the future of open source. Developers can build upon open-source code without violating licenses. However, changes in licensing can limit their ability to monetize.

These concerns highlight the potential erosion of open-source adoption due to license changes and the need for ongoing vigilance. She cautioned that it will take continuous evolution of open-source licensing and adaptation to new technologies and market pressures to resolve lingering issues.

“We must keep going back to the core tenet of open-source software and be very clear as to what that means and doesn’t mean,” Schlemmer recommended. “What problem are we trying to solve as technology evolves?”

Some of those challenges have already been addressed, she added. We have a framework for the open-source definition with clear labels and licenses.

“So, what’s this new concept? Why does what we already have no longer apply when we reference back?”

That is what needs to be aligned.

Google announced a major step forward in the development of a commercial quantum computer on Tuesday, releasing test results for its Willow quantum chip.

Those results show that the more qubits Google used in Willow, the more errors it reduced and the more quantum the system became.

“Google’s achievement in quantum error correction is a significant milestone toward practical quantum computing,” said Florian Neukart, chief product officer at Terra Quantum, a developer of quantum algorithms, computing solutions, and security applications, in Saint Gallen, Switzerland.

“It addresses one of the largest hurdles — maintaining coherence and reducing errors during computation,” he told TechNewsWorld.

Qubits, the basic information unit in quantum computing, are extremely sensitive to their environment. Any disturbances around them can cause them to lose their quantum properties, which is called decoherence. Maintaining qubit stability — or coherence — long enough to perform useful computations has been a significant challenge for developers.

Decoherence also makes quantum computers error-prone, which is why Google’s announcement is so important. Effective error correction is essential to the development of a practical quantum computer.

“Willow marks an important milestone on the journey toward fault-tolerant quantum computing,” said Rebecca Krauthamer, CEO of QuSecure, a maker of quantum-safe security solutions in San Mateo, Calif.

“It’s a step closer to making quantum systems commercially viable,” she told TechNewsWorld.

In a company blog, Google Vice President of Engineering Hartmut Neven explained that researchers tested ever-larger arrays of physical qubits, scaling up from a grid of 3×3 encoded qubits, to a grid of 5×5, to a grid of 7×7. With each advance, they cut the error rate in half. “In other words, we achieved an exponential reduction in the error rate,” he wrote.

“This historic accomplishment is known in the field as ‘below threshold’ — being able to drive errors down while scaling up the number of qubits,” he continued.
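The halving Neven describes can be sketched numerically. The snippet below is only an illustration of that arithmetic: the starting error rate of 0.4% for the 3×3 grid is a hypothetical placeholder, not a figure from Google, and the function name and parameters are invented for this example. It divides the error rate by a fixed factor of two at each step up in grid size.

```python
def projected_error_rates(base_rate, suppression_factor=2.0, grid_sizes=(3, 5, 7)):
    """Map each grid size to an error rate, dividing by suppression_factor per step.

    base_rate is the (hypothetical) error rate of the smallest grid.
    """
    return {
        size: base_rate / (suppression_factor ** step)
        for step, size in enumerate(grid_sizes)
    }


# Hypothetical starting point: 0.4% error rate on the 3x3 grid.
rates = projected_error_rates(base_rate=0.004)
for size, rate in rates.items():
    print(f"{size}x{size} grid: {rate:.4%} error rate")
```

Because each step divides by the same factor, the rate falls geometrically as the grid grows, which is the "exponential reduction" in the quote.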

“The machines are very sensitive, and noise builds up both from any external influence as well as from use itself,” said Simon Fried, vice president for business development and marketing at Classiq, a developer of software for quantum computers, in Tel Aviv, Israel.

“Being able to minimize noise or compensate for it makes it possible to run longer, more complex programs,” he told TechNewsWorld.

“This is significant progress in terms of chip tech because of the inherent stability of the hardware as well as its ability to control noise,” he added.

Neven also noted that as the first system below the threshold, this is the most convincing prototype for a scalable logical qubit built to date. “It’s a strong sign that useful, very large quantum computers can indeed be built,” he wrote. “Willow brings us closer to running practical, commercially-relevant algorithms that can’t be replicated on conventional computers.”

Google also released data on Willow’s performance based on a common quantum computer test known as the random circuit sampling (RCS) benchmark. “[I]t checks whether a quantum computer is doing something that couldn’t be done on a classical computer,” Neven explained. “Any team building a quantum computer should check first if it can beat classical computers on RCS; otherwise, there is strong reason for skepticism that it can tackle more complex quantum tasks.”

Neven called Willow’s performance on the RCS benchmark “astonishing.” It performed a computation in under five minutes that would take one of today’s fastest supercomputers 10 septillion years (that’s 1 followed by 25 zeroes).

“This mind-boggling number exceeds known timescales in physics and vastly exceeds the age of the universe,” he wrote. “It lends credence to the notion that quantum computation occurs in many parallel universes, in line with the idea that we live in a multiverse.”
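For a sense of scale, the comparison with the age of the universe can be worked out directly. This is a rough sketch: it assumes the short-scale meaning of septillion (10^24) and the commonly cited estimate of about 13.8 billion years for the age of the universe, neither of which comes from Neven's post.

```python
SEPTILLION = 10**24                    # short-scale septillion
classical_years = 10 * SEPTILLION      # 10 septillion years = 10**25

# Commonly cited estimate, used here as an assumption.
AGE_OF_UNIVERSE_YEARS = 13.8e9

# Number of zeros after the leading digit when written out in full.
zeros = len(str(classical_years)) - 1

# How many times the age of the universe the classical run would take.
ratio = classical_years / AGE_OF_UNIVERSE_YEARS

print(f"10 septillion years has {zeros} zeros after the leading 1")
print(f"That is roughly {ratio:.1e} times the age of the universe")
```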

Chris Hickman, chief security officer at Keyfactor, a digital identity management company in Cleveland, hailed Willow as “a significant milestone in quantum computing” but cautioned that Willow’s advanced quantum error correction brings the field closer to practical quantum applications, signaling that businesses need to prioritize preparation for the inevitable disruption of quantum computing in areas like encryption and security.

“While this development doesn’t immediately alter the expected timeline for quantum computers to break current encryption standards, it reinforces the idea that progress towards this milestone is accelerating,” he told TechNewsWorld.

“Practical use cases for quantum computers go beyond applications that stand to benefit businesses,” he said. “Bad actors will inevitably leverage the technology for their own nefarious benefit.”

“Hackers will leverage quantum computers to decrypt sensitive information, rendering legacy cryptographic methods obsolete,” he continued. “These include algorithms like RSA and ECC, which are currently considered unbreakable.”

Karl Holmqvist, founder and CEO of Lastwall, a provider of identity-centric and quantum-resilient technologies, in Mountain View, Calif., agreed that the rate of development of cryptographically relevant quantum computers is accelerating. “But I also understand that there are skeptics who think development is not as close as it seems or that it may never arrive,” he told TechNewsWorld.

“So, my question to everyone is this: Given that we will either deploy quantum-resilient solutions too early or too late, which scenario carries more risk?” he asked. “Would you rather understand the implications of post-quantum cryptographic deployments, test them in your environments, and be prepared to rapidly deploy when needed — or risk losing your secrets?”

In his blog, Neven also revealed why he changed his focus from artificial intelligence to quantum computing. “My answer is that both will prove to be the most transformational technologies of our time, but advanced AI will significantly benefit from access to quantum computing,” he wrote.

Quantum computing is inherently designed to tackle complex problems, so it could be very helpful with the development of AI, noted Edward Tian, CEO of GPTZero, maker of an AI detection platform in Arlington, Va. “However, we have seen instances of classical AI still being the better method,” he told TechNewsWorld.

“I came out of AI and entered the quantum computing world specifically because of the promise quantum computing has to unlock doors that remain shut in a classical computing world,” added QuSecure’s Krauthamer.

However, she had a word of caution about the technology. “A quantum computer is not simply a bigger, faster, more powerful computer,” she said. “It thinks in a fundamentally different way and, therefore, will solve different types of problems than we can today. It is wise to be skeptical if quantum computing is presented as a cure-all for challenging computation tasks.”

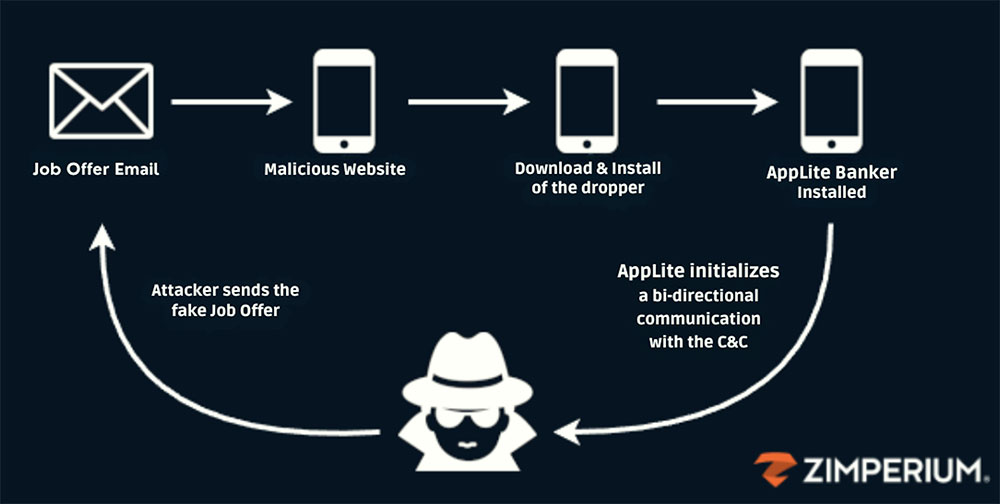

Security researchers on Tuesday revealed a sophisticated mobile phishing campaign that targets job seekers and aims to install malicious software on their phones.

The campaign discovered by Zimperium zLabs targets Android mobile phones and aims to distribute a variant of the Antidot banking trojan that the researchers have dubbed AppLite Banker.

“The AppLite banking trojan’s ability to steal credentials from critical applications like banking and cryptocurrency makes this scam highly dangerous,” said Jason Soroko, a senior fellow at Sectigo, a certificate lifecycle management provider in Scottsdale, Ariz.

“As mobile phishing continues to rise, it’s crucial for individuals to remain vigilant about unsolicited job offers and always verify the legitimacy of links before clicking,” he told TechNewsWorld.

“The AppLite banking trojan requires permissions through the phone’s accessibility features,” added James McQuiggan, a security awareness advocate at KnowBe4, a security awareness training provider in Clearwater, Fla.

“If the user is unaware,” he told TechNewsWorld, “they can allow full control over their device, making personal data, GPS location, and other information available for the cybercriminals.”
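One way to see what an app could ask for before installing it is to inspect its manifest. The sketch below is a minimal illustration, assuming the manifest has already been decoded to plain XML by a separate tool; the sample manifest, package name, and service names are hypothetical. It lists services that declare Android's `BIND_ACCESSIBILITY_SERVICE` permission, which an app must declare to act as an accessibility service. Legitimate apps use this too, so a match is a prompt for closer scrutiny, not proof of malice.

```python
import xml.etree.ElementTree as ET

ANDROID_NS = "http://schemas.android.com/apk/res/android"
ACCESSIBILITY_PERMISSION = "android.permission.BIND_ACCESSIBILITY_SERVICE"


def find_accessibility_services(manifest_xml: str) -> list[str]:
    """Return names of <service> entries that declare the accessibility permission."""
    root = ET.fromstring(manifest_xml)
    flagged = []
    for service in root.iter("service"):
        # Attributes in the android: namespace are keyed as "{namespace}name".
        if service.get(f"{{{ANDROID_NS}}}permission") == ACCESSIBILITY_PERMISSION:
            flagged.append(service.get(f"{{{ANDROID_NS}}}name", "<unnamed>"))
    return flagged


# Hypothetical decoded manifest for illustration only.
SAMPLE_MANIFEST = """
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.example.crmapp">
    <application>
        <service android:name=".SyncService" />
        <service android:name=".HelperService"
            android:permission="android.permission.BIND_ACCESSIBILITY_SERVICE" />
    </application>
</manifest>
"""

for name in find_accessibility_services(SAMPLE_MANIFEST):
    print(f"Service requesting accessibility control: {name}")
```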

In a blog on Zimperium’s website, researcher Vishnu Pratapagiri explained that attackers present themselves as recruiters, luring unsuspecting victims with job offers. As part of their fraudulent hiring process, he continued, the phishing campaign tricks victims into downloading a malicious application that acts as a dropper, eventually installing AppLite.

“The attackers behind this phishing campaign demonstrated a remarkable level of adaptability, leveraging diverse and sophisticated social engineering strategies to target their victims,” Pratapagiri wrote.

A key tactic employed by the attackers involves masquerading as job recruiters or HR representatives from well-known organizations, he continued. Victims are enticed to respond to fraudulent emails, carefully crafted to resemble authentic job offers or requests for additional information.

“People are desperate to get a job, so when they see remote work, good pay, good benefits, they text back,” noted Steve Levy, principal talent advisor with DHI Group, parent company of Dice, a career marketplace for candidates seeking technology-focused roles and employers looking to hire tech talent globally, in Centennial, Colo.

“That starts the snowball rolling,” he told TechNewsWorld. “It’s called pig butchering. Farmers will fatten a pig little by little, so when it’s time to cook it, they’re really big and juicy.”

After the initial communication, Pratapagiri explained that the threat actors direct victims to download a purported CRM Android application. While appearing legitimate, this application functions as a malicious dropper, facilitating the deployment of the primary payload onto the victim’s device.

Illustration of one of the methods employed to distribute and execute the AppLite malware on the victim’s mobile device. (Credit: Zimperium)

Stephen Kowski, field CTO at SlashNext, a computer and network security company in Pleasanton, Calif., noted that the AppLite campaign represents a sophisticated evolution of techniques first seen in Operation Dream Job, a global campaign run in 2023 by the infamous North Korean Lazarus group.

While the original Operation Dream Job used LinkedIn messages and malicious attachments to target job seekers in the defense and aerospace sectors, today’s attacks have expanded to exploit mobile vulnerabilities through fraudulent job application pages and banking trojans, he explained.

“The dramatic shift to mobile-first attacks is evidenced by the fact that 82% of phishing sites now specifically target mobile devices, with 76% using HTTPS to appear legitimate,” he told TechNewsWorld.

“The threat actors have refined their social engineering tactics, moving beyond simple document-based malware to deploy sophisticated mobile banking trojans that can steal credentials and compromise personal data, demonstrating how these campaigns continue to evolve and adapt to exploit new attack surfaces,” Kowski explained.

“Our internal data shows that users are four times more likely to click on malicious emails when using mobile devices compared to desktops,” added Mika Aalto, co-founder and CEO of Hoxhunt, a provider of enterprise security awareness solutions in Helsinki.

“What’s even more concerning is that mobile users tend to click on these malicious emails at an even larger rate during the late night hours or very early in the morning, which suggests that people are more vulnerable to attacks on mobile when their defenses are down,” he told TechNewsWorld. “Attackers are clearly aware of this and are continually evolving their tactics to exploit these vulnerabilities.”

This new wave of cyber scams underscores the evolving tactics used by cybercriminals to exploit job seekers who are motivated to make a prospective employer happy, observed Soroko.

“By capitalizing on individuals’ trust in legitimate-looking job offers, attackers can infect mobile devices with sophisticated malware that targets financial data,” he said. “The use of Android devices, in particular, highlights the growing trend of mobile-specific phishing campaigns.”

“Be careful what you sideload on an Android device,” he cautioned.

DHI’s Levy noted that attacks on job seekers aren’t limited to mobile phones. “I don’t think this is simply relegated to mobile phones,” he said. “We’re seeing this on all the social platforms. We’re seeing this on LinkedIn, Facebook, TikTok, and Instagram.”

“Not only are these scams common, they’re very insidious,” he declared. “They prey on the emotional situation of job seekers.”

“I probably get three to four of these text inquiries a week,” he continued. “They all go into my junk folder automatically. These are the new versions of the Nigerian prince emails that ask you to send them $1,000, and they’ll give you $10 million back.”

Beyond its ability to mimic enterprise companies, AppLite can also masquerade as Chrome and TikTok apps, demonstrating a wide range of target vectors, including full device takeover and application access.

“The level of access provided [to] the attackers could also include corporate credentials, application, and data if the device was used by the user for remote work or access for their existing employer,” Pratapagiri wrote.

“As mobile devices have become essential to business operations, securing them is crucial, especially to protect against the large variety of different types of phishing attacks, including these sophisticated mobile-targeted phishing attempts,” said Patrick Tiquet, vice president for security and architecture of Keeper Security, a password management and online storage company, in Chicago.

“Organizations should implement robust mobile device management policies, ensuring that both corporate-issued and BYOD devices comply with security standards,” he told TechNewsWorld. “Regular updates to both devices and security software will ensure that vulnerabilities are promptly patched, safeguarding against known threats that target mobile users.”

Aalto also recommended the adoption of human risk management (HRM) platforms to tackle the growing sophistication of mobile phishing attacks.

“When a new attack is reported by an employee, the HRM platform learns to automatically find future similar attacks,” he said. “By integrating HRM, organizations can create a more resilient security culture where users become active defenders against mobile phishing and smishing attacks.”

Well, it’s that time of year again. Like you, I’m working through the gift-giving part of the holidays. I used to send out Amazon gift cards until one was stolen. I didn’t get sympathy or a refund from Amazon, so I’ve decided to steer clear of store gift cards altogether. So, I’m back to sending people things I think they might want, and this week, I’ll share my list of tech gadgets that make great gifts.

I’ll close with my last Product of the Week for 2024, a new off-road electric motorcycle from Dust Moto due out next year that is being built almost in my backyard.

Do you have a special lady in your life? Wife, sister, mother, daughter or girlfriend? Well, the best, but far from the cheapest, hair dryer I’ve found is the Dyson Supersonic. It’s normally priced around $430, but I found it on sale for $329. I got one for my wife, and she loved it, so I got one for my sister this year, but don’t tell her!

This hair dryer is relatively small, which makes it easy to pack. It comes up to speed quickly and holds up well. We haven’t had any problems with ours so far.

The Supersonic uses Dyson’s unique design, which pulls the air from low in the handle, so it is unlikely to suck up your hair, and it has adjustments for every hair type, making it extremely flexible. It does come with a host of attachments, as is typical for anything Dyson-made. Don’t ask me to explain all the features because I don’t use them myself.

This is my gift idea for someone you care about because it is well-made and expensive. It’s unlikely they’d buy it for themselves, and they will likely think fondly of you when they dry their hair.

For the older folks in your life (parents, grandparents, uncles, or aunts) who might not be great with technology but who love family pictures, consider a digital picture frame you can preload with family pictures. With a web-connected model, like the Nixplay digital touchscreen picture frame, you can update the picture playlist from the comfort of your couch once the device is set up.

Yes, you’ll have to make sure the recipient’s Wi-Fi is working, which is a good reason to visit rather than just sending the thing out. At 10.1 inches and for around $140 (the 15-inch is on sale for $244 right now), it won’t be too big for a bedside table, and it works with both iOS and Android phones.

Although they say a grandma could upload pictures to the frame, it’s better if you manage it yourself. Regularly updating the pictures turns the frame into an ongoing surprise and a thoughtful reminder that you’re thinking of them. Parents often struggle to feel connected after their kids move out, especially when those kids have their own families. This gift can help bridge that gap, serving as a simple but meaningful way to show you care.

I was in a terrible car accident last year where an airbag knocked me out and broke my back. Because I was hit so hard, I have little memory of the accident, but my 70mai Dash Cam Omni caught the entire event, so I was able to go back and learn from it.

What makes this dash cam different is that it has a head like R2D2 that swivels, so you don’t have to have a rear-view camera. It will follow the action as it moves from side to side, capturing the entire event. It will also record people who mess with your car while it is parked. While it isn’t as good as what Teslas typically ship with, for those of us who don’t drive a Tesla, this is a great way to record your drive.

Image Credit: 70mai

It can also get you out of bogus tickets. I got one of those a few years back where the officer said I was doing 90 on an open road. I wasn’t, and I was in heavy traffic. It was my word against his, and he won. If I’d had the camera back then, it would have paid for itself with that one ticket.

This dash cam can also reinforce the need to drive more safely, given the record you are creating can be used against you. If you have kids who borrow your car, you might want to consider this to keep them from doing stupid things. At $200, it’s one of the more reasonable dash cams out there.

Oh, and there are a lot of insurance scammers out there who will back into your car and scream that you rear-ended them or throw themselves in front of your vehicle, claiming you didn’t yield. This camera can help you get out of those situations as well.

Living in Bend, Oregon, where winters can be harsh, I’ve come to appreciate heated gear. The VolteX Heated Scarf is one of the easiest self-heated items to use on the market.

At under $17, this is a handy gift for those on a budget. You have to charge it up, but it heats almost instantly once turned on and has three heat settings (just like my heated car seats).

The scarf is fluffy, so it feels really good on your neck. I bought one for my wife this year because she hates the cold. She can leave it in the car plugged into the car’s electric system, so it’s always ready for the cold when a little instant warmth is appreciated.

It is very portable, so you can travel with it, though since it has a battery, you’ll need to carry it onboard and not leave it in your luggage. It comes in brown, black, gray, or white. I ended up getting two in different colors since I’m going to want to wear one as well.

Things are getting a bit crazy, given all of the political tension in the air. I’ve been in several situations where I wanted to record what was happening without experiencing the anger that usually comes when you use your smartphone to capture someone breaking the law or behaving badly. In addition, using an action camera to record videos often doesn’t provide the same view you have because the camera is located somewhere other than your face.

Ray-Ban Meta smart glasses have a great camera in them. They can also replace your earbuds if you like to walk and listen to music or audiobooks or have your messages read to you while you are doing something else like running. The glasses come in a variety of styles and will take prescription lenses.

Ray-Ban | Meta Smart Glasses (Video Credit: Meta)

The camera is well hidden, so you don’t have the concerns that the old Google Glass headset had. While the base prices currently range from $299 to $379, these glasses could be just what your loved one needs to capture that fleeting moment when something truly good or terribly bad is happening and you want to create a permanent record.

I hope this list helps you with your holiday shopping choices. Putting it together helped me make some of my own this year. I hope you have a marvelous holiday season!

Hightail all-electric dirt bike (Image Credit: Dust Moto)

While I don’t ride much anymore, I used to own a Suzuki 125 and a Yamaha 175. The 125 was my school motorcycle, and the 175 was for off-road riding. The problem with gas bikes when you are riding in the wilderness is that they make a lot of noise, and they need RPMs for torque. This means you miss a lot of the natural sounds, and when you get into trouble, like on a steep hill, you can end up spinning your wheel while trying to build enough torque to climb it.

At an estimated $10,950 and with a release date of late 2025, Dust Moto’s Hightail all-electric dirt bike is simply awesome. While not street legal, at least not yet, it is a monster of an alternative when it comes to riding off-road. It has an extremely clean design, and it was created by guys who also enjoy riding outdoors (we have a lot of that here in Oregon). Their experience shows in the design and execution of this bike.

The bike puts out 42 HP and 660 Nm of torque and will run for up to two hours on a charge. Plus, it has a replaceable battery, so you could carry a spare. It was created with support from Bloom, a company specializing in electric motorcycles. Even though this is Dust Moto’s first bike, it isn’t Bloom’s first, so you can be confident this bike will do all it claims and more.

Designed within walking distance of me, this would be on my Christmas shortlist for 2025 if I were still riding. As a result, the Hightail all-electric dirt bike by Dust Moto is my last Product of the Week for 2024, even though it won’t be on my Christmas list until 2025, when this bike will be available.

AI-driven systems have become prime targets for sophisticated cyberattacks, exposing critical vulnerabilities across industries. As organizations increasingly embed AI and machine learning (ML) into their operations, the stakes for securing these systems have never been higher. From data poisoning to adversarial attacks that can mislead AI decision-making, the challenge extends across the entire AI/ML lifecycle.

In response to these threats, a new discipline, machine learning security operations (MLSecOps), has emerged to provide a foundation for robust AI security. Let’s explore five foundational categories within MLSecOps.

AI systems rely on a vast ecosystem of commercial and open-source tools, data, and ML components, often sourced from multiple vendors and developers. If not properly secured, each element within the AI software supply chain, whether it’s datasets, pre-trained models, or development tools, can be exploited by malicious actors.

The SolarWinds hack, which compromised multiple government and corporate networks, is a well-known example of a software supply chain attack. Attackers infiltrated the software supply chain, embedding malicious code into widely used IT management software. Similarly, in the AI/ML context, an attacker could inject corrupted data or tampered components into the supply chain, potentially compromising the entire model or system.

To mitigate these risks, MLSecOps emphasizes thorough vetting and continuous monitoring of the AI supply chain. This approach includes verifying the origin and integrity of ML assets, especially third-party components, and implementing security controls at every phase of the AI lifecycle to ensure no vulnerabilities are introduced into the environment.
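One common way to verify the integrity of a third-party ML asset is to compare its cryptographic digest against a value pinned when the asset was first vetted. The sketch below is a minimal illustration, not a complete supply chain control. It assumes the expected SHA-256 digest comes from a source you already trust, and the function names are hypothetical.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file in chunks so large model weights need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's digest matches the pinned value."""
    actual = sha256_of_file(path)
    # compare_digest performs a constant-time comparison of the two hex strings.
    return hmac.compare_digest(actual, expected_sha256.lower())
```

A pipeline could call `verify_artifact` before loading any downloaded model or dataset and refuse to proceed on a mismatch. A matching digest only shows the file is unchanged since it was pinned; it says nothing about whether the original was safe.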

In the world of AI/ML, models are often shared and reused across different teams and organizations, making model provenance — how an ML model was developed, the data it used, and how it evolved — a key concern. Understanding model provenance helps track changes to the model, identify potential security risks, monitor access, and ensure that the model performs as expected.

Open-source models from platforms like Hugging Face or Model Garden are widely used due to their accessibility and collaborative benefits. However, open-source models also introduce risks, as they may contain vulnerabilities that bad actors can exploit once they are introduced to a user’s ML environment.

MLSecOps best practices call for maintaining a detailed history of each model’s origin and lineage, including an AI-Bill of Materials, or AI-BOM, to safeguard against these risks.

By implementing tools and practices for tracking model provenance, organizations can better understand their models’ integrity and performance and guard against malicious manipulation or unauthorized changes, including but not limited to insider threats.
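To make the idea of tamper-evident lineage concrete, here is a small sketch of a hash-chained provenance log, in which each entry's hash covers the event plus the previous entry's hash. It is an illustrative assumption about how such tracking could work, not a description of any particular product, and the event fields are hypothetical.

```python
import hashlib
import json


def _entry_hash(previous_hash: str, event: dict) -> str:
    # Serialize with sorted keys so the same event always hashes the same way.
    payload = json.dumps(event, sort_keys=True) + previous_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(log: list, event: dict) -> None:
    """Add a lineage event whose hash also covers the previous entry's hash."""
    previous_hash = log[-1]["hash"] if log else ""
    log.append({"event": event, "hash": _entry_hash(previous_hash, event)})


def log_is_intact(log: list) -> bool:
    """Recompute the chain; any edited or reordered entry breaks it."""
    previous_hash = ""
    for entry in log:
        if entry["hash"] != _entry_hash(previous_hash, entry["event"]):
            return False
        previous_hash = entry["hash"]
    return True


lineage = []
append_event(lineage, {"step": "trained", "actor": "team-a", "data": "tickets-2023"})
append_event(lineage, {"step": "fine-tuned", "actor": "team-b", "data": "tickets-2024"})
```

Editing an earlier event after the fact makes `log_is_intact` return `False`. Someone able to rewrite the entire log could recompute every hash, so in practice the latest hash would also need to be signed or stored somewhere the editor cannot reach.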

Strong governance, risk, and compliance (GRC) measures are essential for ensuring responsible and ethical AI development and use. GRC frameworks provide oversight and accountability, guiding the development of fair, transparent, and accountable AI-powered technologies.

The AI-BOM is a key artifact for GRC. It is essentially a comprehensive inventory of an AI system’s components, including ML pipeline details, model and data dependencies, license risks, training data and its origins, and known or unknown vulnerabilities. This level of insight is crucial because one cannot secure what one does not know exists.
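As a rough illustration of what such an inventory might look like in practice, the sketch below models an AI-BOM as a simple data structure. The field names, the example system, and the vulnerability identifier are all hypothetical and do not follow any published BOM schema.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Component:
    name: str
    kind: str            # e.g. "model", "dataset", "library"
    version: str
    origin: str          # where the component was obtained
    license: str
    sha256: str = ""     # empty when no digest has been recorded
    known_vulnerabilities: list[str] = field(default_factory=list)


@dataclass
class AIBom:
    system_name: str
    components: list[Component] = field(default_factory=list)

    def missing_digests(self) -> list[str]:
        """Components whose integrity cannot currently be verified."""
        return [c.name for c in self.components if not c.sha256]

    def with_vulnerabilities(self) -> list[str]:
        """Components that carry at least one recorded vulnerability."""
        return [c.name for c in self.components if c.known_vulnerabilities]

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


# Hypothetical inventory; every value here is a placeholder.
bom = AIBom(
    system_name="support-ticket-classifier",
    components=[
        Component("base-model", "model", "1.2.0", "public model hub", "Apache-2.0"),
        Component("tickets-2023", "dataset", "2023-12", "internal export",
                  "proprietary", sha256="ab" * 32),
        Component("tokenizer-lib", "library", "0.9.1", "package index", "MIT",
                  sha256="cd" * 32, known_vulnerabilities=["EXAMPLE-0001"]),
    ],
)
```

Here `bom.missing_digests()` flags `base-model` as unverifiable and `bom.with_vulnerabilities()` flags `tokenizer-lib`, which is the kind of visibility the inventory is meant to provide.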

An AI-BOM provides the visibility needed to safeguard AI systems from supply chain vulnerabilities, model exploitation, and more. This MLSecOps-supported approach offers several key advantages, like enhanced visibility, proactive risk mitigation, regulatory compliance, and improved security operations.

In addition to maintaining transparency through AI-BOMs, MLSecOps best practices should include regular audits to evaluate the fairness and bias of models used in high-risk decision-making systems. This proactive approach helps organizations comply with evolving regulatory requirements and build public trust in their AI technologies.
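A fairness audit can begin with something as simple as comparing how often a model produces a positive outcome for different groups. The sketch below computes the gap in selection rates between groups, a measure often called demographic parity difference. It is one of many possible metrics, and the data and group labels are made up for illustration.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up audit sample: model decisions and the group each case belongs to.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
group_labels = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap = demographic_parity_gap(preds, group_labels)  # 0.75 - 0.25 = 0.5
```

A large gap does not prove a model is unfair on its own, but it is a signal that auditors would want to investigate further.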

AI’s growing influence on decision-making processes makes trustworthiness a key consideration in the development of machine learning systems. In the context of MLSecOps, trusted AI represents a critical category focused on ensuring the integrity, security, and ethical considerations of AI/ML throughout its lifecycle.

Trusted AI emphasizes the importance of transparency and explainability in AI/ML, aiming to create systems that are understandable to users and stakeholders. By prioritizing fairness and striving to mitigate bias, trusted AI complements broader practices within the MLSecOps framework.

The concept of trusted AI also supports the MLSecOps framework by advocating for continuous monitoring of AI systems. Ongoing assessments are necessary to maintain fairness, accuracy, and vigilance against security threats, ensuring that models remain resilient. Together, these priorities foster a trustworthy, equitable, and secure AI environment.

Within the MLSecOps framework, adversarial machine learning (AdvML) is a crucial category for those building ML models. It focuses on identifying and mitigating risks associated with adversarial attacks.

These attacks manipulate input data to deceive models, potentially leading to incorrect predictions or unexpected behavior that can compromise the effectiveness of AI applications. For example, subtle changes to an image fed into a facial recognition system could cause the model to misidentify the individual.
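The same idea can be shown on a toy scale. The sketch below applies a small sign-based perturbation, in the style of the fast gradient sign method, to a hand-written linear classifier. The weights and input are invented for illustration, and real attacks on image models are far more involved.

```python
def score(weights, bias, x):
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def predict(weights, bias, x):
    return 1 if score(weights, bias, x) > 0 else 0


def sign(value):
    return (value > 0) - (value < 0)


def perturb(weights, x, epsilon, current_label):
    """Shift each feature by at most epsilon in the direction that hurts most.

    For a linear model, the gradient of the score with respect to the input
    is simply the weight vector, so the sign of each weight gives the direction.
    """
    direction = -1 if current_label == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


# Invented toy model and input.
weights = [2.0, -1.0, 0.5]
bias = -0.2
x = [0.3, 0.2, 0.4]

original = predict(weights, bias, x)                # score is about 0.4, class 1
x_adv = perturb(weights, x, epsilon=0.15, current_label=original)
attacked = predict(weights, bias, x_adv)            # score is about -0.125, class 0
```

No feature moves by more than 0.15, yet the predicted class flips, which is the essence of an adversarial example.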

By incorporating AdvML strategies during the development process, builders can enhance their security measures to protect against these vulnerabilities, ensuring their models remain resilient and accurate under various conditions.

AdvML emphasizes the need for continuous monitoring and evaluation of AI systems throughout their lifecycle. Developers should implement regular assessments, including adversarial training and stress testing, to identify potential weaknesses in their models before they can be exploited.
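One simple form of stress testing is to measure how accuracy holds up as the allowed perturbation grows. The sketch below does this for a linear classifier, where the worst case can be computed exactly: if every feature may move by at most epsilon, the score can shift by at most epsilon times the sum of the absolute weights. The model and samples are invented, and this shortcut does not carry over directly to nonlinear models.

```python
def score(weights, bias, x):
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def robust_accuracy(weights, bias, samples, labels, epsilon):
    """Share of samples still classified correctly under the worst-case
    perturbation in which each feature moves by at most epsilon."""
    worst_case_shift = epsilon * sum(abs(w) for w in weights)
    correct = 0
    for x, label in zip(samples, labels):
        signed = 1 if label == 1 else -1
        margin = signed * score(weights, bias, x)  # positive when correct
        if margin > worst_case_shift:
            correct += 1
    return correct / len(samples)


# Invented toy model and evaluation set.
weights = [1.0, -1.0]
bias = 0.0
samples = [[2.0, 0.0], [0.5, 0.0], [0.0, 1.0], [0.0, 0.2]]
labels = [1, 1, 0, 0]

report = {eps: robust_accuracy(weights, bias, samples, labels, eps)
          for eps in (0.0, 0.2, 0.3, 0.6)}
# report == {0.0: 1.0, 0.2: 0.75, 0.3: 0.5, 0.6: 0.25}
```

A report like this shows which samples sit close to the decision boundary, and those are natural candidates to include when retraining with adversarial examples.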

By prioritizing AdvML practices, ML practitioners can proactively safeguard their technologies and reduce the risk of operational failures.

AdvML, alongside the other categories, demonstrates the critical role of MLSecOps in addressing AI security challenges. Together, these five categories highlight the importance of leveraging MLSecOps as a comprehensive framework to protect AI/ML systems against emerging and existing threats. By embedding security into every phase of the AI/ML lifecycle, organizations can ensure that their models are high-performing, secure, and resilient.

As a longtime user of the original Sonos Arc, I approached the new Sonos Arc Ultra with excitement and skepticism.

The original Arc has been a staple in my home entertainment setup. It delivers impressive Dolby Atmos sound and effortlessly integrates with the Sonos ecosystem.

With the Arc Ultra promising upgrades in sound quality, design, and connectivity, I was eager to see if it could live up to the hype and justify its higher price tag.

After spending time with the Ultra, it’s clear that Sonos hasn’t just refined its flagship soundbar; it has reimagined what a standalone audio system can offer. But is it enough to tempt existing Arc users like me to take the leap? Let’s dive in.

The Sonos Arc Ultra, released on Oct. 29, is Sonos’ latest flagship soundbar. It is priced at $999 and available in black or white.

This new release marks a slight price increase over its predecessor, the original Arc, which is discontinued and now being sold at discounted rates as retailers clear remaining stock.

The Arc Ultra enters a competitive market, facing rivals like the Sony Bravia Theatre Bar 9 and the Samsung HW-Q990D. Both offer compelling features and, at times, significant discounts.

Visually, the Arc Ultra closely resembles the original Arc, maintaining Sonos’ minimalist aesthetic with a perforated grille encompassing most of the chassis. However, subtle changes include a ledge at the back housing touch controls — play/pause, skip, volume slider, and a voice control button — relocated from the main grille.

The soundbar’s dimensions have been adjusted ever so slightly: it’s wider at 118cm (up from 114cm) but shorter in height at 7.5cm (down from 8.7cm), reducing the likelihood of obstructing the TV screen when placed in front. Weighing approximately 350g less than its predecessor, the Arc Ultra is also more wall-mount friendly.

The design requires an open placement, as positioning it in a nook or under a shelf can impede the upward-firing drivers essential for optimal sound dispersion.

The Arc Ultra boasts a 9.1.4-channel configuration, a significant upgrade from the original Arc’s 5.0.2 setup. It incorporates 14 custom-engineered drivers (seven tweeters, six midrange woofers, and a novel Sound Motion woofer) powered by 15 Class D amplifiers.

This innovative woofer utilizes four smaller, lightweight motors to move the cone, enabling greater air displacement and, according to Sonos, delivering up to twice the bass of the original Arc. The dual-cone design also aims to minimize mechanical vibrations, contributing to a more balanced sound profile.

Despite these advancements, the Arc Ultra lacks support for DTS audio formats, focusing solely on Dolby Atmos for spatial audio. Connectivity options remain limited, with a single HDMI eARC port and no dedicated HDMI inputs, so all external sources must be connected through the TV. This setup may pose challenges for users with multiple high-spec gaming devices and limited HDMI 2.1 ports on their TVs.

Sonos Arc Ultra home theater soundbar: front view (pictured top) and back view

On the upside, the Arc Ultra introduces Bluetooth connectivity (a first for Sonos soundbars) and expands Sonos’ excellent Trueplay calibration support to Android devices, enhancing user accessibility.

In terms of audio performance, the Arc Ultra delivers a clean, precise, and spacious soundstage with impressive three-dimensionality. The enhanced bass is deep and expressive, providing a solid foundation without overwhelming the overall sound profile.

Dialogue clarity has improved, thanks to the new front-firing speaker array dedicated to the center channel, ensuring crisp and intelligible speech reproduction. The soundbar excels in detail retrieval, capturing subtle nuances across various content types.

However, the absence of HDMI passthrough and DTS support may be limiting for some users. Additionally, while the Sonos app offers robust control and customization options, some users have reported occasional issues that could affect the overall user experience.

Compared to competitors like the Sony Bravia Theatre Bar 9 and the Samsung HW-Q990D, the Arc Ultra holds its ground in terms of sound quality and design.

Though officially priced higher, the Sony Bravia Theatre Bar 9 often sees discounts that bring it closer to the Arc Ultra’s price point. The Bravia Theatre Bar 9 boasts a comprehensive feature set, including HDMI passthrough and support for both Dolby Atmos and DTS:X formats, offering greater flexibility for users with diverse content sources.

While more expensive, the Samsung HW-Q990D includes a wireless subwoofer and surround speakers, delivering a more immersive surround sound experience out of the box. Its connectivity options are more extensive, featuring multiple HDMI inputs and support for various audio formats, making it a versatile choice for users seeking a comprehensive home theater setup.

To be sure, Sonos has long faced criticism for its app, which, while offering a sleek design and robust control options, has been plagued by occasional connectivity issues and limited flexibility.

Users often report frustrations with delayed updates, difficulty adding new devices, and problems syncing across the ecosystem. These woes are especially frustrating given the premium price of Sonos products, which sets high expectations for seamless integration.

Although recent updates have aimed to address some of these issues, the app experience still leaves room for improvement, particularly as competitors continue to refine their platforms. While I have suffered through some of these issues myself (particularly with Sonos’ terrific over-ear Ace headphones), the app has thankfully matured to the point that it didn’t inhibit setup.

Still, from a pure hardware standpoint, the Sonos Arc Ultra represents a significant advancement over its predecessor, offering enhanced bass performance, improved dialogue clarity, and a more immersive soundstage.